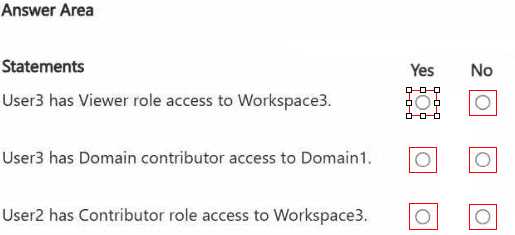

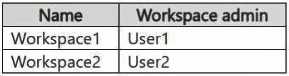

HOTSPOT You have three users named User1, User2, and User3. You have the Fabric workspaces shown in the following table.

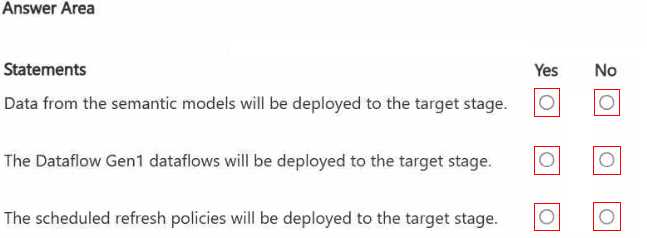

HOTSPOT You have a Fabric workspace named Workspace1_DEV that contains the following items:

- 10 reports
- Four notebooks
- Three lakehouses
- Two data pipelines
- Two Dataflow Gen1 dataflows
- Three Dataflow Gen2 dataflows
- Five semantic models that each have a scheduled refresh policy

You create a deployment pipeline named Pipeline1 to move items from Workspace1_DEV to a new workspace named Workspace1_TEST. You deploy all the items from Workspace1_DEV to Workspace1_TEST. For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

All three are No here. Deployment pipelines just move metadata, not actual data or schedules. Official docs and practice exams back this up if you want to double-check.

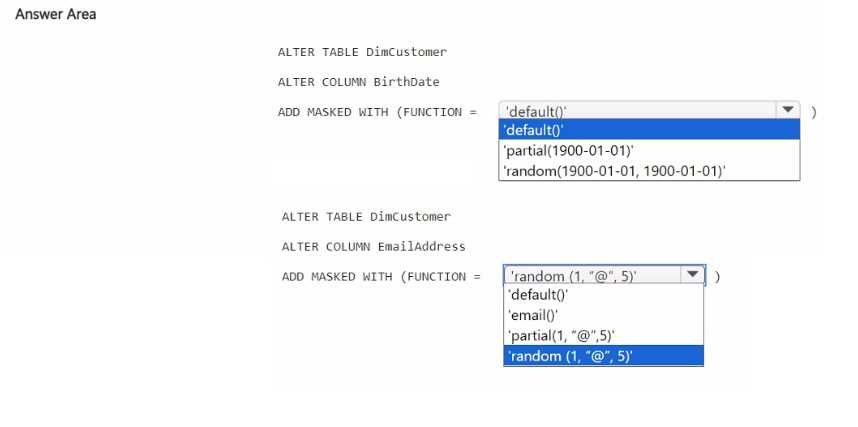

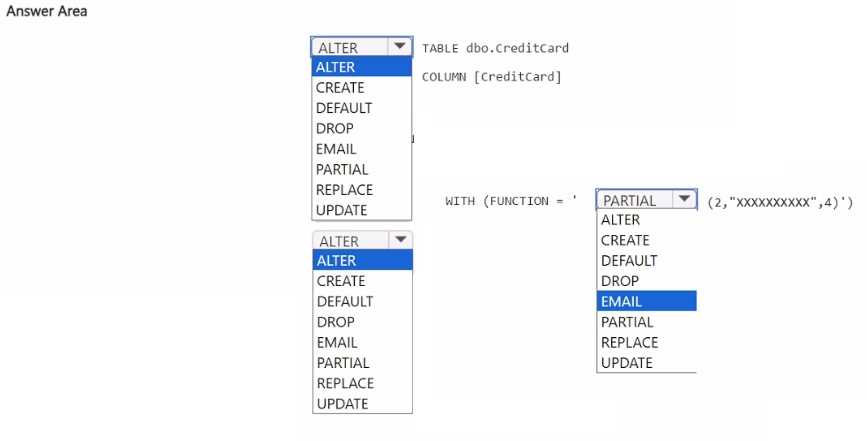

HOTSPOT You have a Fabric workspace that contains a warehouse named Warehouse1. Warehouse1 contains a table named DimCustomers. DimCustomers contains the following columns:

- CustomerName
- CustomerID
- BirthDate
- Email

You need to configure security to meet the following requirements:

- BirthDate in DimCustomer must be masked and display 1900-01-01.
- Email in DimCustomer must be masked and display only the first leading character and the last five characters.

How should you complete the statement? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

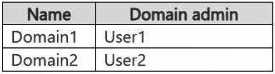
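The two requirements line up with the dynamic data masking functions `default()` (which shows 1900-01-01 for date columns) and `partial(prefix, padding, suffix)`. The sketch below only simulates what a non-privileged reader would see, in plain Python; the T-SQL in the comments shows the general `ADD MASKED WITH` shape, and the `"XXXXXXX"` padding string is an arbitrary choice, not the exam's answer option.

```python
from datetime import date

# Rough T-SQL shape (padding string is a hypothetical choice):
#   ALTER TABLE dbo.DimCustomers ALTER COLUMN BirthDate ADD MASKED WITH (FUNCTION = 'default()');
#   ALTER TABLE dbo.DimCustomers ALTER COLUMN Email ADD MASKED WITH (FUNCTION = 'partial(1,"XXXXXXX",5)');

def mask_default_date(_value: date) -> date:
    # default() on a date column shows 1900-01-01 instead of the real value
    return date(1900, 1, 1)

def mask_partial(value: str, prefix: int, padding: str, suffix: int) -> str:
    # partial(prefix, padding, suffix): keep the first `prefix` and last `suffix`
    # characters and replace everything in between with `padding`.
    # Simplification (assumption): values too short to expose both ends
    # are returned as the padding only.
    if len(value) <= prefix + suffix:
        return padding
    return value[:prefix] + padding + value[len(value) - suffix:]

print(mask_default_date(date(1985, 7, 14)))                    # 1900-01-01
print(mask_partial("jane.doe@contoso.com", 1, "XXXXXXX", 5))   # jXXXXXXXo.com
```

Note that the built-in `email()` function would not fit here: it exposes the first letter but replaces the domain with a fixed `XXX.com`-style suffix rather than keeping the last five characters.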

HOTSPOT You are processing streaming data from an external data provider. You have the following code segment.

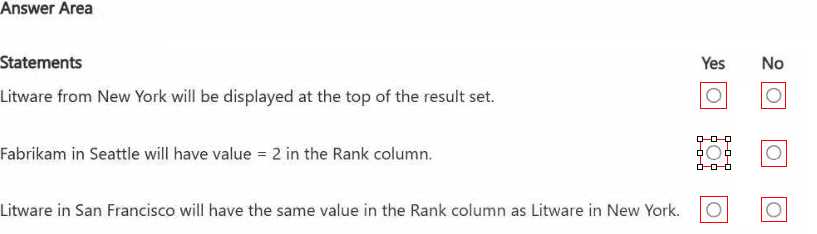

No for the first one since Location is sorted descending, so Washington DC comes first, not New York. Fabrikam in Seattle does get rank 2 because Contoso is higher. Litware in San Francisco and New York both have rank 1 for their respective partitions. I think some might trip over the sort order here.
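The exhibit code isn't reproduced in text, so here is a plain-Python sketch of what a descending sort does to a rank computed per partition, roughly `rank().over(Window.partitionBy(...).orderBy(col("Location").desc()))` in PySpark. Whether the exhibit uses `rank`, `dense_rank`, or `row_number` is an assumption, and the rows below are hypothetical, not the exhibit's data.

```python
from itertools import groupby

def rank_desc(rows, partition_key, order_key):
    """Mimic RANK() OVER (PARTITION BY partition_key ORDER BY order_key DESC)."""
    ranked = []
    # groupby needs the rows sorted by the partition key first
    by_partition = sorted(rows, key=lambda r: r[partition_key])
    for _, group in groupby(by_partition, key=lambda r: r[partition_key]):
        # descending order: "Washington DC" sorts ahead of "New York"
        ordered = sorted(group, key=lambda r: r[order_key], reverse=True)
        rank, previous = 0, None
        for position, row in enumerate(ordered, start=1):
            if position == 1 or row[order_key] != previous:
                rank = position  # ties share a rank; the next rank skips ahead
                previous = row[order_key]
            ranked.append({**row, "rank": rank})
    return ranked

# Hypothetical rows for illustration only
rows = [
    {"Company": "A", "Location": "New York"},
    {"Company": "A", "Location": "Washington DC"},
    {"Company": "B", "Location": "Seattle"},
]
for r in rank_desc(rows, "Company", "Location"):
    print(r["Company"], r["Location"], r["rank"])
```

A single-row partition always gets rank 1, which is why each lone city in its own partition ends up ranked first.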

HOTSPOT You have a Fabric workspace named Workspace1 that contains a warehouse named Warehouse2. A team of data analysts has Viewer role access to Workspace1. You create a table by running the following statement.

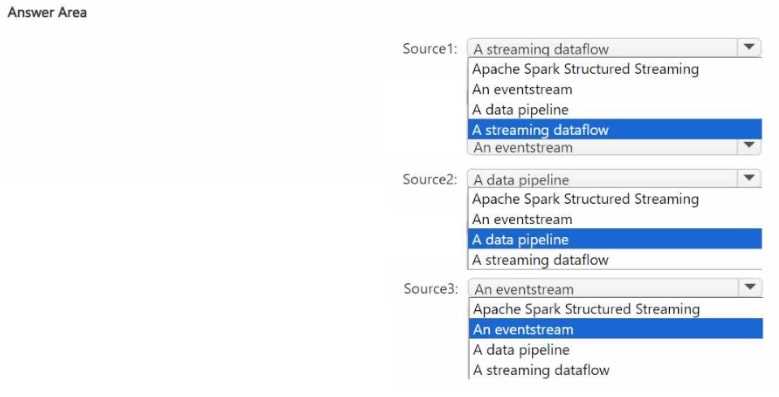

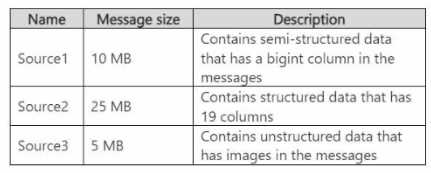

HOTSPOT You need to recommend a Fabric streaming solution that will use the sources shown in the following table.

All three sources have message sizes way over Eventstream's 1 MB per-message cap, so Apache Spark Structured Streaming is the only fit here, even though it needs more code. If minimizing development effort outweighed message-size support, I might say Eventstream. Is there any chance smaller messages are allowed, or is >1 MB guaranteed?
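The decision rule in that comment is essentially a size check that overrides the "least code" preference. A minimal sketch, assuming the 1 MB limit means 1,048,576 bytes (the helper name and that byte interpretation are assumptions, not from the exam):

```python
# Assumption: Eventstream's per-message cap of 1 MB, taken here as 1 MiB.
EVENTSTREAM_MAX_MESSAGE_BYTES = 1 * 1024 * 1024

def pick_engine(max_message_bytes: int) -> str:
    """Hypothetical helper: prefer the low-code option only when messages fit."""
    if max_message_bytes <= EVENTSTREAM_MAX_MESSAGE_BYTES:
        return "Eventstream"  # least development effort
    return "Spark Structured Streaming"  # more code, but no 1 MB cap

print(pick_engine(5 * 1024 * 1024))  # Spark Structured Streaming
```

If any source could guarantee messages under the cap, the same check would flip that source back to Eventstream.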

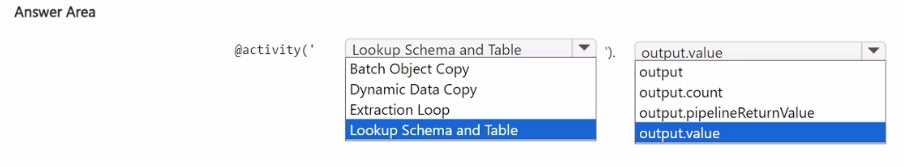

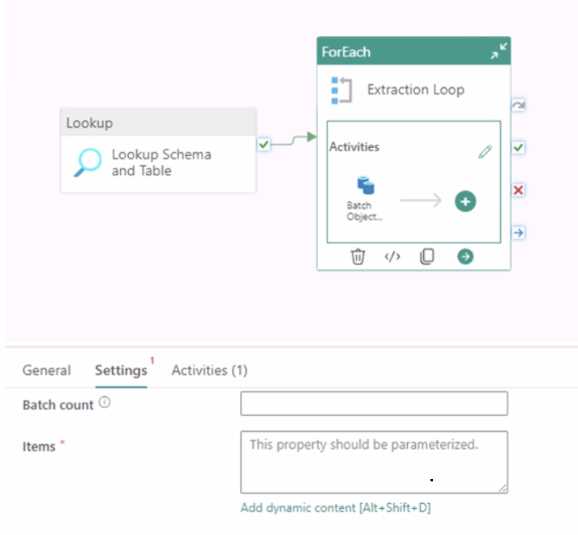

HOTSPOT You are building a data orchestration pattern by using a Fabric data pipeline named Dynamic Data Copy as shown in the exhibit. (Click the Exhibit tab.)

Lookup Schema and Table, output.value
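Reading this answer as the ForEach activity's items expression, `@activity('Lookup Schema and Table').output.value`, the point is that a Lookup with "First row only" turned off returns an object with a `count` and a `value` array, and ForEach iterates that array. The Python below just mimics that shape; the `SchemaName`/`TableName` columns and their rows are hypothetical.

```python
import json

# Hypothetical Lookup output ("First row only" off): a count plus a "value" array.
LOOKUP_OUTPUT = json.loads("""
{
  "count": 2,
  "value": [
    {"SchemaName": "dbo", "TableName": "Customers"},
    {"SchemaName": "sales", "TableName": "Orders"}
  ]
}
""")

def foreach_items(lookup_output):
    # Equivalent of the ForEach items expression ...output.value
    return lookup_output["value"]

def copy_targets(lookup_output):
    # Inside the loop each row is @item(), e.g. @item().TableName
    return [f"{item['SchemaName']}.{item['TableName']}" for item in foreach_items(lookup_output)]

print(copy_targets(LOOKUP_OUTPUT))  # ['dbo.Customers', 'sales.Orders']
```

Pointing ForEach at `output` alone would hand it the whole object rather than the row array, which is why the `.value` part matters.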

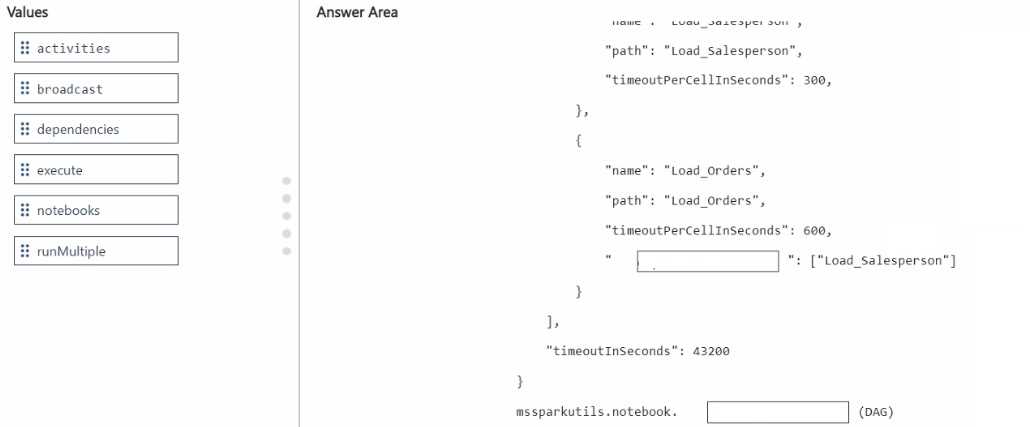

DRAG DROP You have two Fabric notebooks named Load_Salesperson and Load_Orders that read data from Parquet files in a lakehouse. Load_Salesperson writes to a Delta table named dim_salesperson. Load_Orders writes to a Delta table named fact_orders and is dependent on the successful execution of Load_Salesperson. You need to implement a pattern to dynamically execute Load_Salesperson and Load_Orders in the appropriate order by using a notebook. How should you complete the code? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

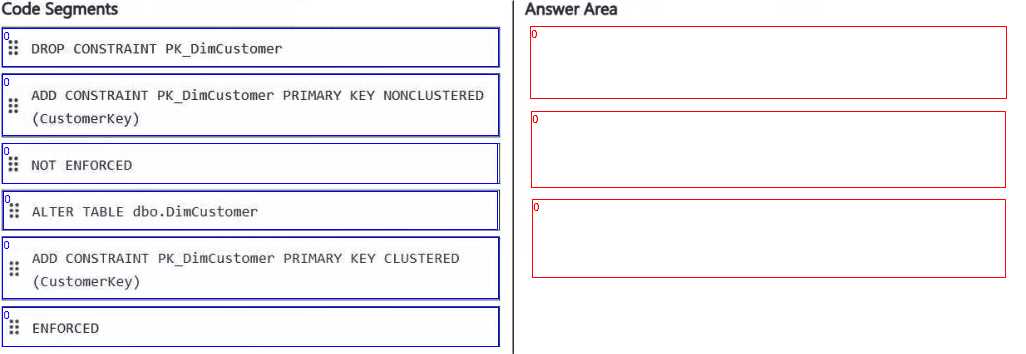
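One common way to express "run Load_Orders only after Load_Salesperson" from a Fabric notebook is a DAG passed to `runMultiple`, where each activity lists its `dependencies`. The sketch below builds such a DAG and, so it can run anywhere, checks the resulting order with Python's `graphlib`. The `runMultiple` call is left commented out because it only exists inside the Fabric runtime, and treating this as the exam's expected answer is an assumption.

```python
from graphlib import TopologicalSorter

# DAG in the shape runMultiple accepts: activities with name, path, dependencies.
dag = {
    "activities": [
        {"name": "Load_Salesperson", "path": "Load_Salesperson", "dependencies": []},
        {"name": "Load_Orders", "path": "Load_Orders", "dependencies": ["Load_Salesperson"]},
    ]
}

def execution_order(dag):
    # Map each activity to the set of activities it must wait for
    graph = {a["name"]: set(a.get("dependencies", [])) for a in dag["activities"]}
    return list(TopologicalSorter(graph).static_order())

print(execution_order(dag))  # ['Load_Salesperson', 'Load_Orders']

# Inside a Fabric notebook the DAG itself would be handed to the runtime, e.g.:
# notebookutils.notebook.runMultiple(dag)
```

Declaring the dependency in the DAG (rather than calling the notebooks one after another by hand) lets the runtime skip Load_Orders if Load_Salesperson fails.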

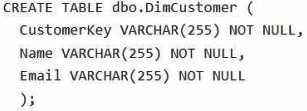

DRAG DROP You have a Fabric warehouse named Warehouse1. In Warehouse1, you create a table named DimCustomer by running the following statement.

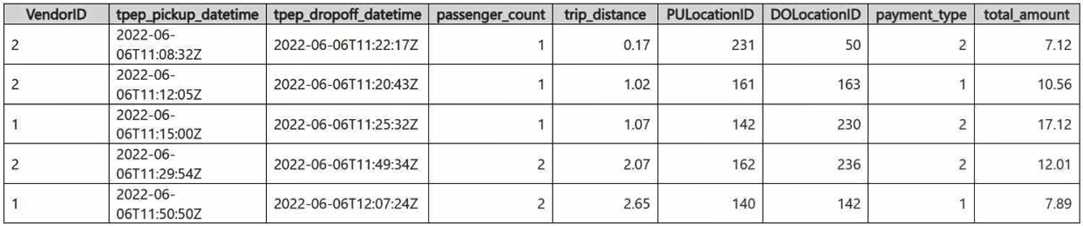

DRAG DROP You have a Fabric eventhouse that contains a KQL database. The database contains a table named TaxiData. The following is a sample of the data in TaxiData.

Kinda confused here, but I think:

RunningTotalAmount = row_cumsum

FirstPickupDateTime = row_window_session

I picked row_window_session for the first pickup since it returns the value at the start of each session (in this case, each hour by payment_type), and row_cumsum to get a running total by VendorID. Not 100% sure, though; these KQL row functions always trip me up. Can someone confirm?
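To see what the two KQL functions do, here is a simplified Python simulation. Both KQL functions need a serialized row set (for example after `sort by`). The KQL lines in the comments use hypothetical column names (`pickup_datetime`, `total_amount`) and hypothetical window sizes, since the sample table isn't in text.

```python
from datetime import datetime, timedelta

# Hypothetical KQL shape (column names and window sizes are assumptions):
#   TaxiData
#   | sort by VendorID asc, pickup_datetime asc
#   | extend RunningTotalAmount = row_cumsum(total_amount, VendorID != prev(VendorID))
#   | extend FirstPickupDateTime = row_window_session(pickup_datetime, 1h, 1h, VendorID != prev(VendorID))

def row_cumsum(terms, restarts=None):
    """Running total that starts over at rows where restarts[i] is True."""
    out, total = [], 0
    for i, term in enumerate(terms):
        if restarts and restarts[i]:
            total = 0
        total += term
        out.append(total)
    return out

def row_window_session(times, max_from_first, max_between, restarts=None):
    """Session start value for each row; rows must already be sorted."""
    out, start, prev = [], None, None
    for i, t in enumerate(times):
        new_session = (
            start is None
            or (restarts and restarts[i])
            or t - start > max_from_first   # too far from the session's first row
            or t - prev > max_between       # too big a gap since the previous row
        )
        if new_session:
            start = t
        out.append(start)
        prev = t
    return out

print(row_cumsum([10, 5, 7], [False, False, True]))  # [10, 15, 7]
```

So `row_window_session` gives every row the first timestamp of its session (a "first pickup" per window), while `row_cumsum` only accumulates values, which matches how the two were assigned above.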