James, G., Witten, D., Hastie, T., & Tibshirani, R. (2013). An Introduction to Statistical Learning: with Applications in R. Springer.

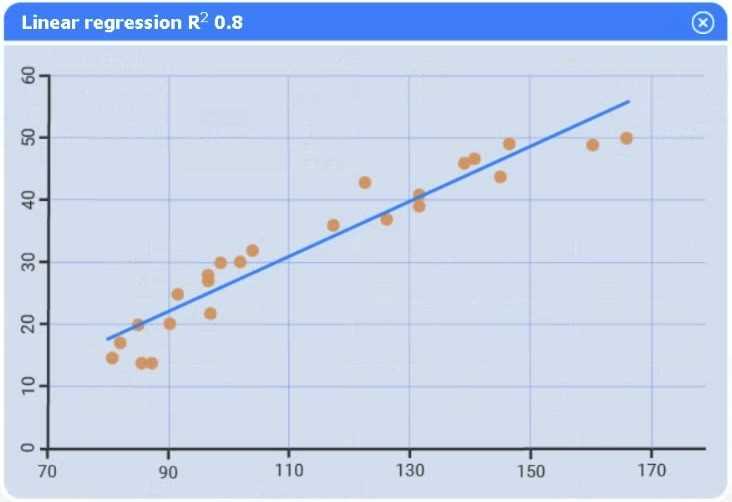

Reference: Chapter 3, "Linear Regression," Section 3.1.3, "Assessing the Accuracy of the Model."

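Detail: This section explains the R-squared (R²) statistic, or coefficient of determination, as a measure of the linear relationship between the response and the predictors. It is defined as the "proportion of variance explained," R² = 1 − RSS/TSS, where RSS is the residual sum of squares and TSS is the total sum of squares. It lies between 0 and 1, and values closer to 1 indicate that the regression accounts for more of the variability in the response.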

DOI: https://doi.org/10.1007/978-1-4614-7138-7_3
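
The "proportion of variance explained" definition in the entry above can be illustrated with a minimal sketch, assuming a single predictor and an ordinary least-squares line. The function names `fit_line` and `r_squared` and the toy data are hypothetical, chosen only for illustration; they do not come from the book.

```python
from statistics import mean

def fit_line(x, y):
    """Return (intercept, slope) of the least-squares line for one predictor."""
    x_bar, y_bar = mean(x), mean(y)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope

def r_squared(y, y_hat):
    """R^2 = 1 - RSS/TSS, the proportion of variance explained."""
    y_bar = mean(y)
    rss = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))  # residual sum of squares
    tss = sum((yi - y_bar) ** 2 for yi in y)               # total sum of squares
    return 1 - rss / tss

# Hypothetical toy data, roughly linear in x.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

intercept, slope = fit_line(x, y)
y_hat = [intercept + slope * xi for xi in x]
r2 = r_squared(y, y_hat)
print(f"R^2 = {r2:.4f}")
```

Predicting the mean of `y` for every observation gives RSS equal to TSS and therefore R² = 0, while a perfect fit gives RSS = 0 and R² = 1, which is where the 0-to-1 range for a least-squares fit with an intercept comes from.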

Draper, N. R., & Smith, H. (1998). Applied Regression Analysis (3rd ed.). Wiley.

Reference: Chapter 2, "Checking the Regression Model," Section 2.10, "The Coefficient of Determination, R²."

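Detail: This section formally defines R² and discusses its use in "evaluating the quality of the fit." It establishes the principle that, among several candidate models, the one with the higher R² (adjusted for the number of predictors) is generally preferred. The adjustment matters because the unadjusted R² never decreases when a predictor is added to a least-squares model, so it cannot by itself distinguish a genuinely better model from a merely larger one.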

DOI: https://doi.org/10.1002/9781118625590
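
The phrase "adjusted for the number of predictors" in the entry above can be sketched with the standard adjusted R² formula, 1 − (1 − R²)(n − 1)/(n − p − 1), where n is the number of observations and p the number of predictors (excluding the intercept). This is a hedged illustration rather than the book's own notation; the function name `adjusted_r_squared` and the two candidate models are hypothetical.

```python
def adjusted_r_squared(r2, n, p):
    """Adjusted R^2 for a model with p predictors (plus intercept) fit to n observations."""
    if n - p - 1 <= 0:
        raise ValueError("need n > p + 1 observations")
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)

# Hypothetical comparison: both models are fit to the same n = 30 observations.
n = 30
adj_small = adjusted_r_squared(0.80, n, p=2)   # fewer predictors, slightly lower R^2
adj_large = adjusted_r_squared(0.81, n, p=10)  # more predictors, slightly higher R^2

print(f"small model: {adj_small:.4f}")
print(f"large model: {adj_large:.4f}")
```

In this made-up comparison the larger model has the higher raw R², but after the penalty for its extra predictors the smaller model scores higher, which is the situation the adjustment is meant to expose.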

Hastie, T., Tibshirani, R., & Friedman, J. (2009). The Elements of Statistical Learning: Data Mining, Inference, and Prediction (2nd ed.). Springer.

Reference: Chapter 3, "Linear Methods for Regression," Section 3.2, "Linear Regression Models and Least Squares."

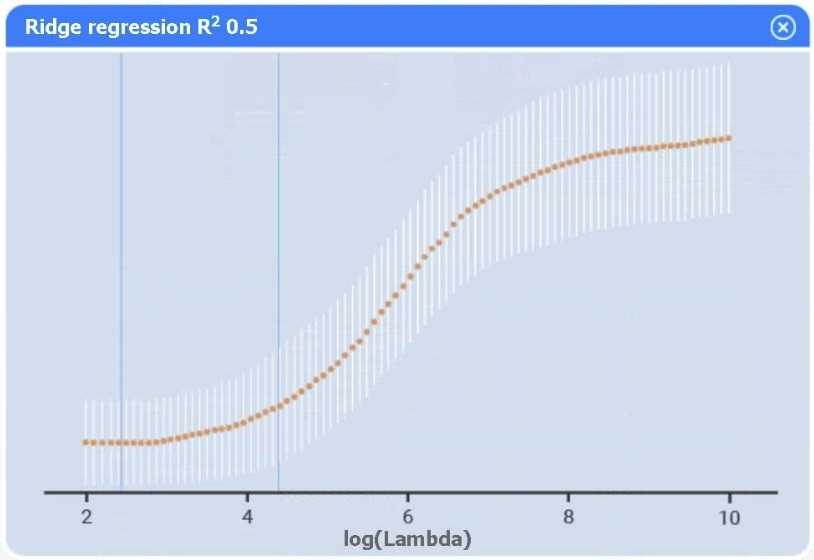

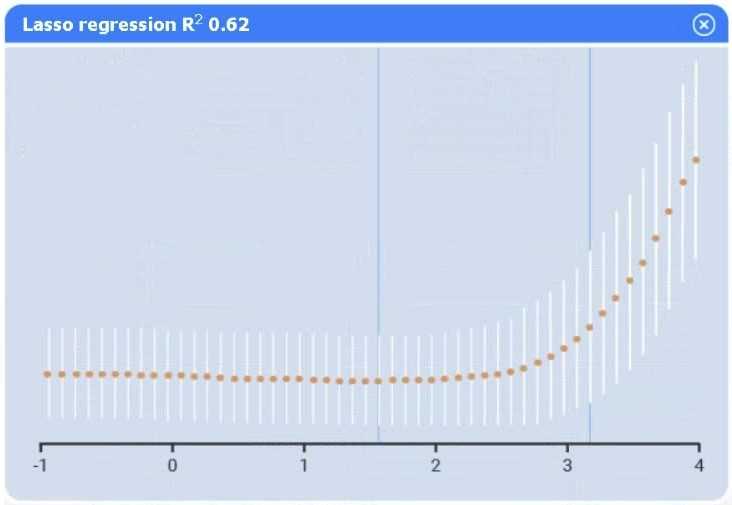

Detail: This foundational text discusses model assessment and selection. It contrasts ordinary least-squares regression with shrinkage methods such as ridge regression (Section 3.4.1) and the lasso (Section 3.4.2), noting that while these methods are useful for high-dimensional data or multicollinearity, the base R² remains a primary metric for comparing overall model fit.

DOI: https://doi.org/10.1007/978-0-387-84858-7