1. Databricks Official Documentation

"ML end-to-end example": This tutorial demonstrates the correct workflow. A Pipeline containing feature engineering stages is created. The documentation shows the pipeline being fitted only on the training data (pipelinemodel = pipeline.fit(traindf))

and then this fitted model is used to transform the test data (preddf = pipelinemodel.transform(testdf)). This directly supports the methodology described in the correct answer. (See: Databricks Documentation -> Machine Learning -> Tutorials -> ML end-to-end example -> Section: "Create a machine learning model").

2. Apache Spark Official Documentation

"ML Pipelines": The documentation explains that when a Pipeline.fit() method is called

it calls fit() on each Estimator stage (like StandardScaler) in sequence. The resulting Transformer becomes part of the PipelineModel. This PipelineModel can then be used to transform() new data. This confirms that the statistics for scaling are learned only from the data passed to fit()

which should be the training set. (See: Apache Spark 3.5.0 Documentation -> MLlib: Machine Learning Library -> ML Pipelines -> Section: "How it works").

3. Hastie

T.

Tibshirani

R.

& Friedman

J. (2009). The Elements of Statistical Learning. Springer. In Chapter 7

Section 10.2

"The Wrong and Right Way to Do Cross-validation

" the authors explicitly warn against this error. They state

"The test data should be strictly held out from all aspects of the model fitting

including feature scaling. Any preprocessing steps that use data

such as computing means and variances for standardization

must be learned from the training data only and then applied to the test data." This foundational principle of machine learning directly applies to a train-test split.

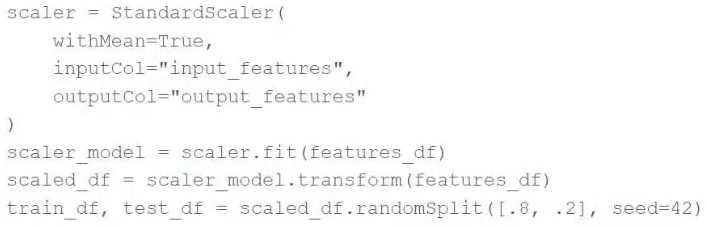

Upon code review, a colleague expressed concern with the features being standardized prior to

splitting the data into a training set and a test set.

Which of the following changes can the data scientist make to address the concern?

Upon code review, a colleague expressed concern with the features being standardized prior to

splitting the data into a training set and a test set.

Which of the following changes can the data scientist make to address the concern? Upon code review, a colleague expressed concern with the features being standardized prior to

splitting the data into a training set and a test set.

Which of the following changes can the data scientist make to address the concern?

Upon code review, a colleague expressed concern with the features being standardized prior to

splitting the data into a training set and a test set.

Which of the following changes can the data scientist make to address the concern?