Q: 11

17 of 55.

A data engineer has noticed that upgrading the Spark version in their applications from Spark 3.0 to Spark 3.5 has improved the runtime of some scheduled Spark applications.

Looking further, the data engineer realizes that Adaptive Query Execution (AQE) is now enabled.

Which operation should AQE be performing to automatically improve the Spark application's performance?
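For context: Spark 3.0 introduced AQE but left it disabled by default, and Spark 3.2 turned `spark.sql.adaptive.enabled` on by default. AQE re-optimizes the plan at runtime using shuffle statistics. It can coalesce small post-shuffle partitions, switch a sort-merge join to a broadcast or shuffled hash join, and split skewed partitions in skew joins. The sketch below is a deliberately simplified, pure-Python model of the first of these, partition coalescing. Adjacent partitions are merged greedily until the next one would push the group past a target size, which stands in for `spark.sql.adaptive.advisoryPartitionSizeInBytes`. The helper name and the greedy rule are assumptions for illustration. Spark's real implementation also honors other settings, such as a minimum partition size.

```python
from typing import List


def coalesce_partitions(sizes: List[int], target_size: int) -> List[List[int]]:
    """Simplified model of AQE post-shuffle partition coalescing (hypothetical helper).

    Groups adjacent partition indices so that each group's total size stays at or
    below target_size; a single partition larger than the target keeps its own group.
    Sizes and target_size just need to use the same unit.
    """
    groups: List[List[int]] = []
    current: List[int] = []
    current_size = 0
    for index, size in enumerate(sizes):
        # Close the current group if adding this partition would exceed the target.
        if current and current_size + size > target_size:
            groups.append(current)
            current, current_size = [], 0
        current.append(index)
        current_size += size
    if current:
        groups.append(current)
    return groups


# Five shuffle partitions (sizes in MB) coalesced toward a 64 MB target:
# the three small ones merge, so five reduce tasks become three.
print(coalesce_partitions([10, 20, 30, 70, 5], target_size=64))  # [[0, 1, 2], [3], [4]]
```

Fewer, larger tasks cut per-task scheduling overhead without the user having to tune `spark.sql.shuffle.partitions` for each job.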


Q: 12

11 of 55.

Which Spark configuration controls the number of tasks that can run in parallel on an executor?
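The number of tasks an executor runs at once equals its number of task slots. That is `spark.executor.cores` divided (rounding down) by `spark.task.cpus`, which defaults to 1. The helper below is a hypothetical arithmetic sketch of that relationship, not a Spark API.

```python
def task_slots(executor_cores: int, task_cpus: int = 1, num_executors: int = 1) -> int:
    """Concurrent task slots implied by spark.executor.cores and spark.task.cpus.

    Hypothetical helper: each running task reserves task_cpus cores, so one executor
    runs at most executor_cores // task_cpus tasks at the same time.
    """
    if executor_cores < 1 or task_cpus < 1 or num_executors < 1:
        raise ValueError("all arguments must be positive integers")
    if task_cpus > executor_cores:
        # A task needing more cores than the executor has could never be scheduled.
        raise ValueError("spark.task.cpus must not exceed spark.executor.cores")
    return num_executors * (executor_cores // task_cpus)


# spark.executor.cores=4 with the default spark.task.cpus=1: 4 parallel tasks per executor.
print(task_slots(4))
# spark.executor.cores=5, spark.task.cpus=2: 2 parallel tasks per executor (1 core unused).
print(task_slots(5, task_cpus=2))
# 10 executors with 4 cores each: up to 40 tasks across the cluster at once.
print(task_slots(4, num_executors=10))
```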


Q: 13

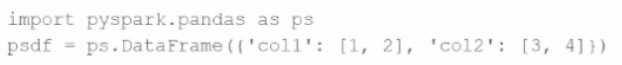

Given the code fragment:

```python
import pyspark.pandas as ps

psdf = ps.DataFrame({'col1': [1, 2], 'col2': [3, 4]})
```

Which method is used to convert a Pandas API on Spark DataFrame (pyspark.pandas.DataFrame) into a standard PySpark DataFrame (pyspark.sql.DataFrame)?


Q: 14

What is the risk of converting a large Pandas API on Spark DataFrame back to a pandas DataFrame?


Q: 15

A developer notices that all the post-shuffle partitions in a dataset are smaller than the value set for spark.sql.adaptive.maxShuffledHashJoinLocalMapThreshold.

Which type of join will Adaptive Query Execution (AQE) choose in this case?
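The Spark SQL tuning documentation describes when AQE prefers a shuffled hash join over a sort-merge join. This happens when every post-shuffle partition is no larger than `spark.sql.adaptive.maxShuffledHashJoinLocalMapThreshold` and that threshold is not smaller than `spark.sql.adaptive.advisoryPartitionSizeInBytes` (default 64 MB). It applies regardless of `spark.sql.join.preferSortMergeJoin`. The threshold defaults to 0, which effectively disables the conversion. The function below is a hypothetical, simplified restatement of that rule. It ignores AQE's other runtime choices, such as switching to a broadcast hash join.

```python
MB = 1024 * 1024


def prefers_shuffled_hash_join(partition_sizes, local_map_threshold, advisory_partition_size=64 * MB):
    """Simplified model of AQE's sort-merge -> shuffled hash join rule (hypothetical helper)."""
    if local_map_threshold < advisory_partition_size:
        # Covers the default threshold of 0, which turns the conversion off.
        return False
    # Every partition must be small enough to build a local hash map from.
    return all(size <= local_map_threshold for size in partition_sizes)


sizes = [20 * MB, 35 * MB, 50 * MB]
print(prefers_shuffled_hash_join(sizes, local_map_threshold=128 * MB))  # every partition <= 128 MB
print(prefers_shuffled_hash_join(sizes, local_map_threshold=0))         # default: disabled
```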


Q: 16

What is the benefit of using Pandas on Spark for data transformations?


Q: 17

A data engineer wants to write a Spark job that creates a new managed table. If the table already exists, the job should fail and not modify anything.

Which save mode and method should be used?
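`DataFrameWriter.mode()` accepts `append`, `overwrite`, `ignore`, and `error`, where `errorifexists` is an alias and this is the default. `saveAsTable()` creates a managed table when no `path` option is set. Combined with it, `error` mode fails if the table already exists and leaves that table untouched. In PySpark that is `df.write.mode("error").saveAsTable("table_name")`. The sketch below is a hypothetical, pure-Python model of the four modes against an in-memory catalog, so the semantics can be checked without a cluster. It is not Spark's implementation.

```python
from typing import Dict, List


class TableAlreadyExistsError(Exception):
    """Stands in for the AnalysisException Spark raises in 'error' mode."""


def save_as_table(catalog: Dict[str, List[tuple]], name: str, rows: List[tuple], mode: str = "error") -> None:
    """Hypothetical model of DataFrameWriter save modes for a managed table."""
    mode = mode.lower()
    exists = name in catalog
    if mode in ("error", "errorifexists"):
        if exists:
            raise TableAlreadyExistsError(f"Table {name} already exists")
        catalog[name] = list(rows)
    elif mode == "ignore":
        if not exists:
            catalog[name] = list(rows)  # an existing table is silently left unchanged
    elif mode == "append":
        catalog.setdefault(name, []).extend(rows)
    elif mode == "overwrite":
        catalog[name] = list(rows)
    else:
        raise ValueError(f"Unknown save mode: {mode}")


catalog: Dict[str, List[tuple]] = {}
save_as_table(catalog, "sales", [(1, "a")])
try:
    save_as_table(catalog, "sales", [(2, "b")])  # default mode is "error"
except TableAlreadyExistsError as exc:
    print("failed:", exc)
print(catalog["sales"])  # unchanged: [(1, 'a')]
```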


Q: 18

10 of 55.

What is the benefit of using Pandas API on Spark for data transformations?


Q: 19

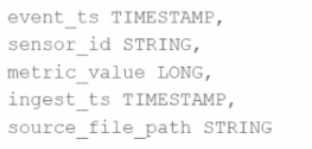

Given the schema:

event_ts TIMESTAMP, sensor_id STRING, metric_value LONG, ingest_ts TIMESTAMP, source_file_path STRING

The goal is to deduplicate rows based on event_ts, sensor_id, and metric_value.
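In PySpark, rows that repeat the same `event_ts`, `sensor_id`, and `metric_value` can be removed with `df.dropDuplicates(["event_ts", "sensor_id", "metric_value"])`. The remaining columns (`ingest_ts`, `source_file_path`) are not part of the comparison, and Spark does not guarantee which of the duplicate rows survives. The pure-Python sketch below models that subset-based deduplication on a list of dicts. The helper name and sample rows are hypothetical, and the sketch keeps the first occurrence only to make its output deterministic.

```python
from datetime import datetime
from typing import Dict, Iterable, List, Sequence


def drop_duplicates(rows: Iterable[Dict], subset: Sequence[str]) -> List[Dict]:
    """Keep one row per distinct combination of the subset columns (hypothetical helper)."""
    seen = set()
    result = []
    for row in rows:
        key = tuple(row[col] for col in subset)
        if key not in seen:
            seen.add(key)
            result.append(row)
    return result


ts = datetime(2024, 1, 1, 12, 0)
rows = [
    {"event_ts": ts, "sensor_id": "s1", "metric_value": 10,
     "ingest_ts": datetime(2024, 1, 1, 12, 5), "source_file_path": "/landing/a.json"},
    # Same event re-ingested from another file: a duplicate on the subset columns.
    {"event_ts": ts, "sensor_id": "s1", "metric_value": 10,
     "ingest_ts": datetime(2024, 1, 1, 13, 0), "source_file_path": "/landing/b.json"},
    {"event_ts": ts, "sensor_id": "s2", "metric_value": 10,
     "ingest_ts": datetime(2024, 1, 1, 12, 5), "source_file_path": "/landing/a.json"},
]

deduped = drop_duplicates(rows, ["event_ts", "sensor_id", "metric_value"])
print(len(deduped))  # 2
```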

