Databricks DATABRICKS CERTIFIED ASSOCIAT…

Q: 1

A Spark application is experiencing performance issues in client mode because the driver is resource-constrained.

How should this issue be resolved?

Options

Q: 2

How can a Spark developer ensure optimal resource utilization when running Spark jobs in Local Mode for testing?

Options

Q: 3

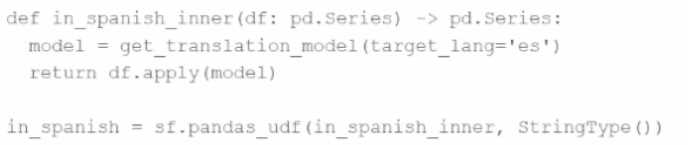

An MLOps engineer is building a Pandas UDF that applies a language model that translates English strings into Spanish. The initial code is loading the model on every call to the UDF, which is hurting the performance of the data pipeline.

The initial code is:

def in_spanish_inner(df: pd.Series) -> pd.Series:
    model = get_translation_model(target_lang='es')
    return df.apply(model)

in_spanish = sf.pandas_udf(in_spanish_inner, StringType())

How can the MLOps engineer change this code to reduce how many times the language model is loaded?

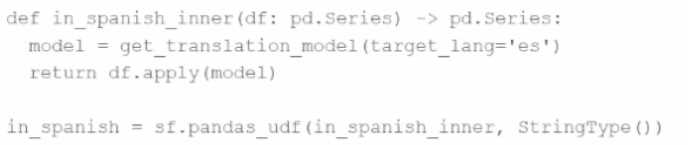

Options
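One common way to avoid reloading an expensive resource for every batch is to process an iterator of batches and load the resource once, before the loop. PySpark's pandas UDFs support this shape through `Iterator[pd.Series] -> Iterator[pd.Series]` type hints. The sketch below illustrates only the pattern, in plain Python, with lists standing in for `pd.Series`. The `get_translation_model` defined here is a hypothetical stub that counts loads, not the function from the question.

```python
from typing import Callable, Iterator, List

load_count = 0  # counts how many times the stub model is loaded


def get_translation_model(target_lang: str) -> Callable[[str], str]:
    """Hypothetical stand-in for an expensive model load."""
    global load_count
    load_count += 1
    return lambda text: f"[{target_lang}] {text}"


def in_spanish_inner(batches: Iterator[List[str]]) -> Iterator[List[str]]:
    # Load once, before iterating, so every batch reuses the same model.
    model = get_translation_model(target_lang="es")
    for batch in batches:
        yield [model(text) for text in batch]


# Three batches flow through the function, but the model is loaded only once.
batches = iter([["hello", "world"], ["good morning"], ["thanks"]])
translated = list(in_spanish_inner(batches))
```

With real pandas batches, the same structure would iterate over `pd.Series` objects and yield `batch.apply(model)`, so the load cost is paid once per iterator of batches rather than once per batch.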

Q: 4

A developer wants to test Spark Connect with an existing Spark application.

What are the two alternative ways the developer can start a local Spark Connect server without changing their existing application code? (Choose 2 answers)

Options

Q: 5

A data scientist has identified that some records in the user profile table contain null values in any of the fields, and such records should be removed from the dataset before processing. The schema includes fields like user_id, username, date_of_birth, created_ts, etc.

The schema of the user profile table looks like this:

Which block of Spark code can be used to achieve this requirement?

Options
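For reference, PySpark exposes this kind of cleanup through `DataFrame.na.drop(how="any")` (also available as `DataFrame.dropna`), which removes every row that has a null in any column. The plain-Python sketch below mirrors that "any null" rule on a list of dictionaries. The sample rows and the helper name `drop_rows_with_any_null` are invented for illustration.

```python
from typing import Any, Dict, List, Optional

Row = Dict[str, Optional[Any]]


def drop_rows_with_any_null(rows: List[Row]) -> List[Row]:
    """Keep only the rows in which every field is non-null."""
    return [row for row in rows if all(v is not None for v in row.values())]


# Invented sample data using a subset of the field names from the question.
profiles: List[Row] = [
    {"user_id": 1, "username": "ana", "date_of_birth": "1990-01-01"},
    {"user_id": 2, "username": None, "date_of_birth": "1985-05-05"},
    {"user_id": 3, "username": "li", "date_of_birth": None},
]

clean = drop_rows_with_any_null(profiles)
```

Only the first profile survives, because each of the other two has at least one null field.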

Q: 6

A data engineer is reviewing a Spark application that applies several transformations to a DataFrame but notices that the job does not start executing immediately.

Which two characteristics of Apache Spark's execution model explain this behavior? (Choose 2 answers)

Options

Q: 7

What is the relationship between jobs, stages, and tasks during execution in Apache Spark?

Options
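As background, Spark's execution hierarchy nests three levels: an action triggers a job, a job is divided into stages at shuffle boundaries, and each stage runs one task per partition. The toy data model below sketches that nesting in plain Python. The class names and the `plan_job` helper are illustrative assumptions, not Spark APIs.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    stage_id: int
    partition: int  # each task processes exactly one partition


@dataclass
class Stage:
    stage_id: int
    tasks: List[Task] = field(default_factory=list)


@dataclass
class Job:
    stages: List[Stage] = field(default_factory=list)


def plan_job(partitions_per_stage: List[int]) -> Job:
    """Build a toy job: one stage per list entry, one task per partition."""
    job = Job()
    for stage_id, n_partitions in enumerate(partitions_per_stage):
        tasks = [Task(stage_id, p) for p in range(n_partitions)]
        job.stages.append(Stage(stage_id, tasks))
    return job


# A job with one shuffle: 4 input partitions, then 2 post-shuffle partitions.
job = plan_job([4, 2])
task_counts = [len(stage.tasks) for stage in job.stages]
```

Here the single job contains two stages, and the stages contain four and two tasks respectively.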

Q: 8

Which command overwrites an existing JSON file when writing a DataFrame?

Options

Q: 9

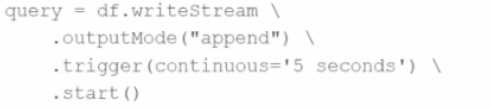

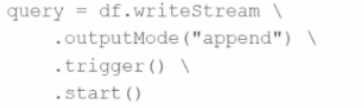

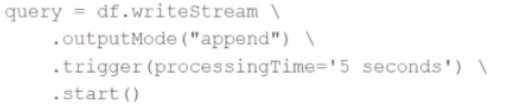

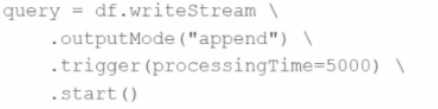

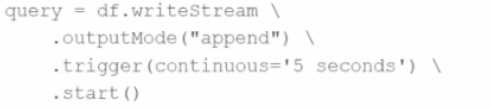

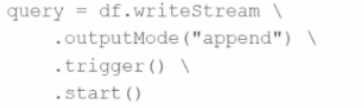

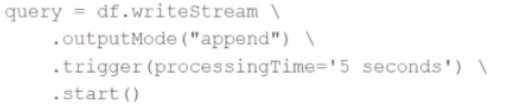

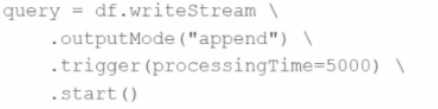

A data engineer is working on a real-time analytics pipeline using Apache Spark Structured Streaming. The engineer wants to process incoming data and ensure that triggers control when the query is executed. The system needs to process data in micro-batches with a fixed interval of 5 seconds.

Which code snippet could the data engineer use to fulfill this requirement?

A)

B)

C)

D)

Options

Q: 10

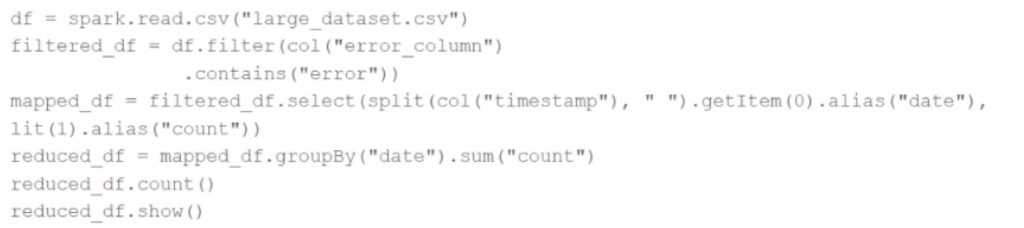

Given the code:

df = spark.read.csv("large_dataset.csv")
filtered_df = df.filter(col("error_column").contains("error"))
mapped_df = filtered_df.select(split(col("timestamp"), " ").getItem(0).alias("date"),
                               lit(1).alias("count"))
reduced_df = mapped_df.groupBy("date").sum("count")
reduced_df.count()
reduced_df.show()

At which point will Spark actually begin processing the data?

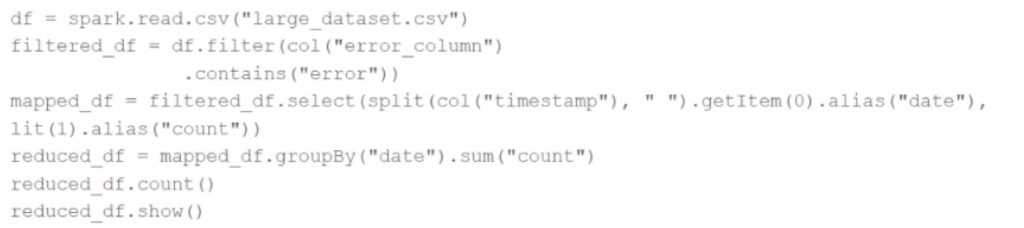

Options
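As background for this kind of question: in Spark, transformations such as `filter`, `select`, and `groupBy` are lazy and only describe a plan, while actions such as `count()` and `show()` trigger execution. The toy class below models that distinction in plain Python. `LazyFrame` and its methods are invented for illustration and are not Spark APIs.

```python
from typing import Callable, List, Tuple


class LazyFrame:
    """Toy model of lazy evaluation; not a Spark API."""

    def __init__(self, source: Callable[[], List[int]], log: List[str],
                 ops: Tuple = ()):
        self._source = source
        self._log = log
        self._ops = tuple(ops)

    def filter(self, pred: Callable[[int], bool]) -> "LazyFrame":
        # Transformation: records the step, does no work.
        return LazyFrame(self._source, self._log, self._ops + (("filter", pred),))

    def map(self, fn: Callable[[int], int]) -> "LazyFrame":
        # Transformation: records the step, does no work.
        return LazyFrame(self._source, self._log, self._ops + (("map", fn),))

    def _run(self) -> List[int]:
        # Every action replays the whole recorded plan from the source.
        self._log.append("executed")
        rows = self._source()
        for kind, fn in self._ops:
            if kind == "filter":
                rows = [r for r in rows if fn(r)]
            else:
                rows = [fn(r) for r in rows]
        return rows

    def count(self) -> int:
        return len(self._run())  # action

    def collect(self) -> List[int]:
        return self._run()  # action


log: List[str] = []
frame = LazyFrame(lambda: [1, 2, 3, 4, 5, 6], log)
evens_doubled = frame.filter(lambda x: x % 2 == 0).map(lambda x: x * 2)
runs_before_action = len(log)   # nothing has executed yet
n = evens_doubled.count()       # first action: plan runs
rows = evens_doubled.collect()  # second action: plan runs again
runs_after_actions = len(log)
```

In this toy model, building the pipeline performs no work, and each of the two actions replays the plan independently.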
