AWS SAP-C02 Exam Questions 2025

Our SAP-C02 Exam Questions bring you the most recent and reliable questions for the AWS Certified Solutions Architect – Professional certification, carefully checked by subject matter experts. Each dump includes verified answers with detailed explanations, clarifications on wrong options, and trusted references. With our online exam simulator and free demo questions, Cert Empire makes your SAP-C02 exam preparation smarter, faster, and more effective.

All the questions are reviewed by Vanesa Martin, an SAP-C02 certified professional working with Cert Empire.

Exam Questions


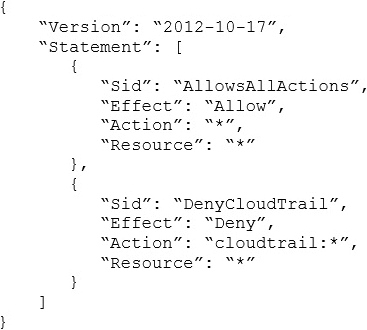

A company with several AWS accounts is using AWS Organizations and service control policies (SCPs). An administrator created the following SCP and attached it to an organizational unit (OU) that contains AWS account 1111-1111-1111:

Developers working in account 1111-1111-1111 complain that they cannot create Amazon S3 buckets. How should the administrator address this problem?
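Questions like this one depend on how SCPs combine with IAM permissions. The sketch below does not reproduce the SCP from the question and is not an answer key. It is a simplified, hypothetical model (the `Statement`, `matches`, and `is_allowed` names are illustrative, not an AWS API) of the general rule: an explicit Deny always wins, and an action is permitted only if the SCP allows it *and* the identity's IAM policy grants it. It deliberately ignores resources, conditions, `NotAction`, resource-based policies, and the fact that SCPs at every level from the root through the OU to the account must each allow the action.

```python
from __future__ import annotations

from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass(frozen=True)
class Statement:
    """One simplified policy statement (hypothetical model, not the IAM JSON schema)."""
    effect: str               # "Allow" or "Deny"
    actions: tuple[str, ...]  # action patterns such as "s3:*" or "s3:CreateBucket"


def matches(statement: Statement, action: str) -> bool:
    # Compare action names case-insensitively; fnmatch approximates IAM's "*" wildcard.
    return any(fnmatchcase(action.lower(), p.lower()) for p in statement.actions)


def is_allowed(action: str, scps: list[Statement], iam_policy: list[Statement]) -> bool:
    """Return True if the action survives both the SCP and the IAM policy."""
    # 1. An explicit Deny in either layer overrides every Allow.
    if any(s.effect == "Deny" and matches(s, action) for s in scps + iam_policy):
        return False
    # 2. SCPs only set a ceiling: the action must be allowed by the SCP...
    scp_allows = any(s.effect == "Allow" and matches(s, action) for s in scps)
    # 3. ...and must also be granted by the identity's own IAM policy.
    iam_allows = any(s.effect == "Allow" and matches(s, action) for s in iam_policy)
    return scp_allows and iam_allows


if __name__ == "__main__":
    # Hypothetical SCP that allows only EC2 actions, applied to an account whose
    # developers have full S3 and EC2 permissions in IAM.
    scp = [Statement("Allow", ("ec2:*",))]
    iam = [Statement("Allow", ("s3:*", "ec2:*"))]
    print(is_allowed("s3:CreateBucket", scp, iam))   # False: the SCP never allows S3
    print(is_allowed("ec2:RunInstances", scp, iam))  # True: allowed by both layers
```

Checking for a Deny first mirrors the IAM rule that an explicit deny cannot be overridden; the separate SCP and IAM checks reflect that an SCP by itself never grants permissions.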


About the AWS Certified Solutions Architect – Professional (SAP-C02) Exam

The AWS Certified Solutions Architect – Professional (SAP-C02) exam is an advanced-level certification offered by Amazon Web Services (AWS). It validates a candidate’s ability to design, deploy, and evaluate applications on AWS while ensuring high availability, security, and cost optimization.

As cloud adoption continues to rise, AWS-certified professionals are in high demand. Earning the SAP-C02 certification demonstrates expertise in designing complex AWS solutions that align with business needs. This certification is ideal for experienced cloud professionals seeking to advance their careers in AWS architecture and cloud solutions.

Why Choose the AWS SAP-C02 Certification?

The AWS SAP-C02 certification offers multiple career advantages:

- Industry Recognition – A globally recognized credential proving expertise in AWS cloud architecture.

- Career Advancement – Opens doors to roles such as AWS Solutions Architect, Cloud Consultant, and Enterprise Architect.

- Hands-On Expertise – Demonstrates advanced skills in multi-tier applications, hybrid cloud strategies, and AWS cost management.

- Higher Salary Potential – AWS-certified professionals are often reported to earn average salaries of $140,000+ annually.

- Growing Demand for AWS Professionals – As businesses migrate to AWS cloud infrastructure, the need for certified solutions architects continues to grow.

Who Should Take the SAP-C02 Certification?

The SAP-C02 exam is intended for:

- Experienced Solutions Architects and Cloud Engineers managing AWS workloads.

- IT Professionals and Consultants working with cloud migrations and AWS deployments.

- DevOps and Security Engineers seeking expertise in AWS automation, security, and networking.

Candidates should have:

- At least two years of hands-on experience with AWS.

- In-depth knowledge of AWS networking, storage, and security solutions.

- Experience designing fault-tolerant and scalable AWS architectures.

SAP-C02 Exam Format and Structure

Understanding the SAP-C02 exam format is essential for effective preparation. Here’s what to expect:

- Number of Questions: 75

- Question Types: Multiple-choice, multiple-response, and scenario-based questions

- Exam Duration: 180 minutes

- Passing Score: 750 out of 1000

- Languages Available: English, Japanese, Korean, Simplified Chinese

- Exam Fee: $300
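The format figures above translate directly into a pacing budget: 180 minutes for 75 questions is 2.4 minutes per question. The sketch below works that arithmetic out and produces elapsed-time checkpoints. The optional review buffer (`reserve_minutes`) and the helper names are hypothetical study aids, not AWS guidance, and the model assumes even pacing across questions.

```python
from __future__ import annotations


def minutes_per_question(total_minutes: float, questions: int,
                         reserve_minutes: float = 0) -> float:
    """Average time per question after setting aside an optional review buffer."""
    if questions <= 0:
        raise ValueError("questions must be positive")
    return (total_minutes - reserve_minutes) / questions


def checkpoints(total_minutes: float, questions: int, every: int = 15,
                reserve_minutes: float = 0) -> list[tuple[int, int]]:
    """(question number, elapsed minutes) targets, assuming even pacing."""
    pace = minutes_per_question(total_minutes, questions, reserve_minutes)
    return [(q, round(q * pace)) for q in range(every, questions + 1, every)]


if __name__ == "__main__":
    print(minutes_per_question(180, 75))             # 2.4
    print(checkpoints(180, 75, reserve_minutes=15))  # [(15, 33), (30, 66), (45, 99), (60, 132), (75, 165)]
```

Reserving 15 minutes for flagged questions drops the average to 2.2 minutes per question, which is one way to practice the timing during full-length mock exams.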

Key Topics Covered in the SAP-C02 Exam

The AWS Certified Solutions Architect – Professional (SAP-C02) certification validates expertise in AWS cloud architecture, security, and cost optimization. Below is a breakdown of the key domains and their exam weightings:

1. Design Solutions for Organizational Complexity (26%)

- Hybrid cloud architecture and multi-account strategies

- AWS Organizations and Service Control Policies (SCPs)

- Designing governance, security, and compliance policies

2. Design for New Solutions (29%)

- Implementing scalable and secure AWS applications

- Disaster recovery (DR) and business continuity planning

- Selecting appropriate AWS services for application design

3. Continuous Improvement for Existing Solutions (25%)

- Cost optimization strategies (AWS Cost Explorer, AWS Budgets)

- Performance tuning and fault tolerance

- AWS Well-Architected Framework and its best practices

4. Accelerate Workload Migration and Modernization (20%)

- Migration strategies (Rehost, Replatform, Refactor/Re-architect)

- AWS Database Migration Service (DMS) and Schema Conversion Tool (SCT)

- Modernizing workloads using serverless services such as AWS Lambda and AWS Fargate
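To turn the domain percentages above into a study-time budget, it can help to estimate roughly how many questions each domain contributes. The sketch below assumes the weights apply evenly across all 75 questions; AWS publishes only percentages, and some questions are unscored, so treat the results as rough approximations. The `estimated_questions` helper is hypothetical.

```python
from __future__ import annotations

# Domain weights as listed above (percent of exam content).
DOMAIN_WEIGHTS: dict[str, int] = {
    "Design Solutions for Organizational Complexity": 26,
    "Design for New Solutions": 29,
    "Continuous Improvement for Existing Solutions": 25,
    "Accelerate Workload Migration and Modernization": 20,
}


def estimated_questions(weights: dict[str, int], total_questions: int) -> dict[str, float]:
    """Rough question count per domain, assuming weights apply uniformly to every question."""
    if sum(weights.values()) != 100:
        raise ValueError("domain weights should add up to 100%")
    return {domain: total_questions * pct / 100 for domain, pct in weights.items()}


if __name__ == "__main__":
    for domain, count in estimated_questions(DOMAIN_WEIGHTS, 75).items():
        print(f"{domain}: ~{count:.1f} questions")
```

Under this assumption, Design for New Solutions accounts for roughly 22 questions, so it deserves the largest share of practice time.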


SAP-C02 Exam Questions by Cert Empire

If your goal is to become a cloud architect who designs scalable, secure, and cost-efficient systems on AWS, then the AWS Certified Solutions Architect – Professional (SAP-C02) certification is your destination. It’s one of the most respected and challenging credentials in cloud computing—proving your ability to architect and deploy dynamic applications on AWS infrastructure.

But with complexity comes pressure. The SAP-C02 exam covers deep architectural concepts, from hybrid environments and cost optimization to high availability and migration strategies. That’s why Cert Empire’s SAP-C02 exam questions are your smartest preparation ally, turning intricate cloud architecture into clear, structured, and understandable lessons.

With the right study materials and practice tests, you can transform uncertainty into mastery.

Why Practice Questions Are the Key to SAP-C02 Success

The AWS SAP-C02 isn’t an exam you can “wing.” It challenges your real-world problem-solving abilities. AWS expects you to evaluate business requirements, design fault-tolerant architectures, and choose the most cost-effective services, all under time pressure.

That’s why Cert Empire’s practice questions go beyond memorization. Each scenario pushes you to think like an AWS Solutions Architect—making decisions that balance performance, cost, security, and reliability.

What sets Cert Empire apart is the why. Every question comes with a clear explanation, helping you understand AWS logic, architectural principles, and design trade-offs. This makes your exam prep material more than a question bank—it’s a learning experience that builds intuition and depth.

Study Anytime, Anywhere – Your Cloud, Your Schedule

Balancing study time with a busy life can be tough. But with Cert Empire’s SAP-C02 PDF exam questions, you can take control of your learning.

The study resources are available in portable, printable formats so you can study from your phone, laptop, or even a printed copy during travel. Whether you prefer quick refreshers or long focused sessions, your exam prep material stays ready whenever you are.

You set the pace; Cert Empire provides the path.

From Exam Pressure to Architectural Confidence

The SAP-C02 exam can feel intimidating: it’s long, scenario-based, and filled with complex case studies. But the best way to overcome that anxiety is by practicing with realistic simulations.

Cert Empire’s practice tests replicate the real AWS exam interface, structure, and time constraints. You’ll get used to reading lengthy questions, analyzing requirements, and selecting the best AWS solutions confidently.

By the time exam day arrives, you’ll not only understand the content but also think like a professional architect.


What Cert Empire Offers for SAP-C02 Candidates

1. Realistic Exam Simulation

Experience the feel of the real AWS SAP-C02 exam with practice tests that mirror its tone, style, and complexity.

2. Expert-Verified Study Material

All study resources are created and reviewed by AWS-certified architects to ensure complete accuracy and coverage.

3. Portable and Printable PDF Exam Questions

Study at your convenience with exam prep materials that adapt to your lifestyle.

4. Free Practice Questions

Try a sample of practice questions before purchasing and see how Cert Empire elevates your preparation.

5. 24/7 Dedicated Support

Whether you have a question or need guidance, Cert Empire’s customer support is there for you anytime.

6. Transparent Refund Policy

Your success matters most; Cert Empire’s fair refund policy ensures your satisfaction and peace of mind.

Building a Strong Study Routine for SAP-C02

This is an advanced certification, and the best results come from structured, focused study. Here’s a proven approach:

- Understand the Exam Domains: Focus on design for organizational complexity, cost control, security, and resiliency.

- Start with Core AWS Concepts: Review VPCs, IAM, storage, networking, and automation tools.

- Use Cert Empire’s Study Resources: Their study materials simplify complex architecture case studies.

- Practice Daily: Regularly use practice questions to apply knowledge in real-world scenarios.

- Take Full-Length Practice Tests: Simulate the real exam to build timing and confidence.

- Review and Reflect: Learn from every mistake—each one teaches you how AWS architects think.

With consistency and the right prep, you won’t just pass; you’ll excel.

Beyond SAP-C02 – Advancing Your Cloud Career

Once you earn your AWS Certified Solutions Architect – Professional credential, you stand among elite cloud professionals. It opens doors to roles such as:

- Senior Cloud Architect

- Cloud Solutions Manager

- Enterprise Cloud Strategist

- Technical Consultant

From here, you can expand into specialty certifications like Security, Machine Learning, or Advanced Networking. And for every stage of your journey, Cert Empire has the exam prep materials and practice tests to support you.

Final Thoughts

The SAP-C02 certification proves you’re not just familiar with the cloud; you can architect it. It’s a badge of deep technical knowledge and strategic thinking that sets you apart in a competitive field.

With Cert Empire’s SAP-C02 exam questions, you gain more than preparation; you gain perspective. Their study resources, practice questions, and exam simulations give you everything you need to master the exam and build a cloud career with confidence.

Your future as a certified AWS architect starts with one smart move: preparing with Cert Empire.

Start your SAP-C02 exam prep today: https://certempire.com

12 reviews for AWS SAP-C02 Exam Questions 2025

One thought on "AWS SAP-C02 Exam Questions 2025"


Anyone tried Cert Empire for the SAP-C02 exam? How did this exam dump material help with your preparation?

Trump (verified owner) –

My experience with this site was great, as it has 100% real questions available for practice, which helped me pass my AWS SAP-C02 exam with 925/1000.

Aaron Cole (verified owner) –

Luckily, I discovered Cert Empire ten days before the exam, and I managed to pass it with 943/1000. 90% of the questions were in the exam. It’s worth it.

Cleo Daphne (verified owner) –

Delighted to share that I passed the SAP-C02 exam with flying colors, thanks to Cert Empire! Highly recommend!

Lark Simmon (verified owner) –

Passed my exam with the help of Cert Empire practice questions.

Kelly Brook (verified owner) –

I am very happy, as I just got my SAP-C02 exam result today and I passed with a great score. All the credit goes to the Cert Empire site, as it has 100% real questions.

Aaron (verified owner) –

The explanations in Cert Empire’s dumps were so clear. I finally understood the tricky parts of the SAP-C02. Honestly, thanks to the makers of these.

Jeannette Horton (verified owner) –

I felt like I had AWS secrets in my back pocket after getting these dumps. This resource made SAP-C02 “too easy” for me. Thanks, Cert Empire, for your support!

zakroli (verified owner) –

Quality dumps from a quality site: Cert Empire.

Jayden (verified owner) –

My decision to buy Cert Empire dumps was one of my best decisions. The reason is that the content is comprehensive and aligned with the latest exam formats.

Boone (verified owner) –

Today, I’m an AWS Certified Solutions Architect. I think Cert Empire played a vital role in helping me pass my exam: their dumps made my preparation easier, and I finally succeeded.


Levi Perry (verified owner) –

The AWS SAP-C02 file balanced detail and brevity perfectly. It never felt overwhelming, yet covered every necessary topic. Cert Empire ensured that the content stayed complete but concise.