What is the Microsoft AZ-305 exam, and what will you learn from it?

The AZ-305 exam, Designing Microsoft Azure Infrastructure Solutions, leads to the Microsoft Azure Solutions Architect Expert certification and is designed for professionals who want to validate their expertise in designing Azure solutions.

It is ideal for solutions architects, cloud engineers, and senior IT professionals responsible for translating business requirements into scalable, secure, and cost-effective cloud solutions.

By preparing for and passing the AZ-305 exam, you will gain expertise in designing infrastructure, identity, security, data, and application solutions on Azure, as well as optimizing networking, cost, and monitoring.

Practicing with the best AZ-305 exam questions at Cert Empire ensures you are prepared for real-world scenario-based questions and practical implementation challenges.

Exam Snapshot

| Exam Detail | Description |
| --- | --- |
| Exam Code | AZ-305 |
| Exam Name | Designing Microsoft Azure Infrastructure Solutions |
| Vendor | Microsoft |
| Version / Year | 2025 |
| Average Salary | $120,000 – $150,000 annually (varies by region and experience) |
| Cost | $165 USD |
| Exam Format | Multiple-choice, scenario-based, and practical design questions |
| Duration (minutes) | 150 |
| Delivery Method | Online proctored or testing center |
| Languages | English, plus additional languages |
| Scoring Method | Weighted scoring (0–1000 points) |
| Passing Score | 700/1000 |
| Prerequisites | Recommended: AZ-900, AZ-104, and AZ-204 |
| Retake Policy | Wait 24 hours after first attempt; limited attempts in a year |
| Target Audience | Solutions architects, cloud engineers, senior IT professionals |
| Certification Validity | 1 year |
| Release Date | 2021 |

Prerequisites before taking the AZ-305 exam

Candidates should ideally have:

- Experience in designing and implementing cloud solutions using Azure.

- Understanding of identity, networking, storage, compute, and security architectures in Azure.

- Familiarity with governance, monitoring, and cost optimization strategies.

- Completion of AZ-900, AZ-104, and AZ-204 is highly recommended for foundational and intermediate Azure knowledge.

Main objectives and domains you will study for AZ-305

The AZ-305 exam focuses on designing solutions for complex cloud architectures. The main domains include:

- Design Identity, Governance, and Monitoring Solutions (25–30%) – Authentication, RBAC, policies, monitoring, and alerting.

- Design Data Storage Solutions (15–20%) – Relational and non-relational storage, data integration, and backup strategies.

- Design Business Continuity Solutions (10–15%) – Disaster recovery, high availability, and backup.

- Design Infrastructure Solutions (30–35%) – Compute, networking, and application architecture, including scaling and security.

- Design Application Architecture (10–15%) – Microservices, serverless applications, and integration patterns.
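Because the domains carry different weights, one reasonable way to budget study time is in proportion to the midpoint of each range. The sketch below does only that arithmetic. The weight ranges are copied from the list above; the 60-hour budget and the `allocate_hours` helper are illustrative assumptions, not official guidance. Since the listed ranges do not sum to exactly 100%, the midpoints are normalized first.

```python
# Domain weight ranges (percent), copied from the list above.
DOMAIN_WEIGHTS = {
    "Identity, Governance, and Monitoring": (25, 30),
    "Data Storage": (15, 20),
    "Business Continuity": (10, 15),
    "Infrastructure": (30, 35),
    "Application Architecture": (10, 15),
}


def allocate_hours(total_hours, weights=DOMAIN_WEIGHTS):
    """Split total_hours across domains in proportion to each range's midpoint.

    Hypothetical helper for illustration; midpoints are normalized so the
    shares add up to the full budget even if the ranges do not total 100%.
    """
    midpoints = {name: (low + high) / 2 for name, (low, high) in weights.items()}
    total = sum(midpoints.values())
    return {
        name: round(total_hours * mid / total, 1)
        for name, mid in midpoints.items()
    }


if __name__ == "__main__":
    # 60 hours is an assumed budget; substitute your own.
    for domain, hours in allocate_hours(60).items():
        print(f"{domain}: {hours} h")
```

Treat the output as a starting point and shift hours toward the domains where your practice scores are weakest.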

Topics to cover in each AZ-305 exam domain

Design Identity, Governance, and Monitoring Solutions:

- Implement Microsoft Entra ID (formerly Azure AD), conditional access, and RBAC

- Define governance policies and resource locks

- Design monitoring, logging, and alerting solutions

Design Data Storage Solutions:

- Select appropriate data storage technologies (SQL, Cosmos DB, Blob Storage)

- Implement data retention, backup, and recovery strategies

- Optimize storage for performance and cost

Design Business Continuity Solutions:

- Implement high availability and failover strategies

- Configure disaster recovery plans and multi-region deployments

- Ensure resiliency for mission-critical workloads

Design Infrastructure Solutions:

- Design virtual networks, subnets, and hybrid connectivity

- Implement compute scaling solutions (VMs, AKS, App Services)

- Apply security controls, encryption, and network segmentation

Design Application Architecture:

- Design microservices and serverless solutions

- Integrate APIs, messaging, and event-driven architectures

- Optimize applications for performance, scalability, and cost

Changes in the latest version of AZ-305

The latest AZ-305 exam emphasizes modern cloud architectures, hybrid connectivity, and advanced governance. Microsoft updated content to include Azure Kubernetes Service (AKS), serverless solutions, advanced security, and cost optimization strategies. Candidates are expected to design solutions reflecting enterprise-level requirements.

Register and schedule your AZ-305 exam

You can register through the Microsoft Certification portal. Exams are available online with a proctor or at authorized testing centers.

AZ-305 exam cost, and can you get any discounts?

The exam costs $165 USD. Discounts may be available for students, educators, or Microsoft Learn partners. Bundled study resources and practice questions from Cert Empire can help you save costs and improve exam readiness.

Exam policies you should know before taking AZ-305

- Present a valid government-issued ID.

- Arrive or log in 30 minutes early for check-in.

- Electronic devices, notes, and calculators are not allowed.

- Review retake policies if the first attempt is unsuccessful.

What can you expect on your AZ-305 exam day?

- Multiple-choice and scenario-based questions focusing on designing enterprise-grade Azure solutions.

- Practical design scenarios covering compute, networking, data, security, and monitoring.

- 150 minutes to complete the exam.

Practicing with the best AZ-305 exam questions at Cert Empire ensures you are ready for real-world scenario-based questions.

Plan your AZ-305 study schedule effectively with 5 Study Tips

Tip 1: Review the official AZ-305 exam blueprint in detail.

Tip 2: Create hands-on labs for compute, storage, networking, and security solutions.

Tip 3: Practice scenario-based questions using Cert Empire resources.

Tip 4: Participate in study groups or forums for design discussions.

Tip 5: Take timed mock exams to simulate real exam conditions and improve time management.
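For the timed mock exams in Tip 5, it can help to work out a per-question pace in advance. The sketch below is a minimal example: the 150-minute duration comes from this guide, while the question count, the 15-minute review buffer, and the `minutes_per_question` helper are assumptions for illustration, since the number of questions is not stated here and can vary.

```python
EXAM_MINUTES = 150  # exam duration stated in this guide


def minutes_per_question(question_count, review_minutes=15, total_minutes=EXAM_MINUTES):
    """Return the average minutes available per question.

    Hypothetical helper: reserves review_minutes at the end for a final
    pass, then divides the remaining time evenly across the questions.
    """
    if question_count <= 0:
        raise ValueError("question_count must be positive")
    if not 0 <= review_minutes < total_minutes:
        raise ValueError("review_minutes must be between 0 and total_minutes")
    return (total_minutes - review_minutes) / question_count


if __name__ == "__main__":
    # 50 questions is a made-up figure; use the count from your mock exam.
    print(f"{minutes_per_question(50):.1f} minutes per question")
```

Case-study questions usually take longer than standalone items, so an even split is only a rough guide.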

Best study resources you can use to prepare for AZ-305

- Microsoft Learn: AZ-305 learning paths and labs

- Azure free tier for hands-on design and testing

- Cert Empire’s curated AZ-305 practice questions

- Video tutorials from Microsoft and trusted online learning platforms

- Azure architecture and solution design forums

Career opportunities you can explore after earning AZ-305

- Azure Solutions Architect – designing enterprise-scale cloud solutions.

- Cloud Infrastructure Architect – planning hybrid and multi-cloud deployments.

- Cloud Consultant – advising organizations on Azure best practices.

- DevOps Architect – integrating infrastructure and application design with CI/CD pipelines.

AZ-305 certification demonstrates your ability to design scalable, secure, and highly available solutions in Azure, opening doors to senior cloud architecture roles.

Certifications to go for after completing AZ-305

- AZ-700: Azure Network Engineer Associate

- AZ-500: Azure Security Engineer Associate

- DP-203: Azure Data Engineer Associate (for data-focused architectures)

These certifications build on AZ-305 skills and prepare professionals for advanced roles in Azure architecture, networking, and security.

How does AZ-305 compare to other cloud certifications?

AZ-305 is an advanced-level, solution-architect-focused certification, unlike AZ-900 (fundamentals) or AZ-104 (administration). It is comparable to AWS Solutions Architect – Professional or Google Professional Cloud Architect, targeting professionals responsible for enterprise cloud solution design.

Practicing with the best AZ-305 exam questions at Cert Empire ensures you gain practical architecture experience, understand scenario-based questions, and confidently pass the exam while mastering real-world Azure solution design skills.

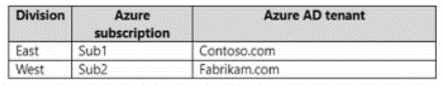

Sub1 contains an Azure App Service web app named App1. App1 uses Azure AD for single-tenant user authentication. Users from contoso.com can authenticate to App1.

You need to recommend a solution to enable users in the fabrikam.com tenant to authenticate to App1.

What should you recommend?


Elijah Arlo (verified owner) –

Cert Empire helped me ace my certification exam with ease.

Chris Domino (verified owner) –

Best for AZ 400 exam – much recommended!

srinivas.challagolla100 (verified owner) –

Cert Empire’s AZ-305 dumps are really helpful and well-organized — a great resource for exam prep!

Just a few minor updates could make them perfect: some explanations (Q12, 13, 15) and case study details (Q16, 18, 20, 21–29) seem to be missing. The file currently has 320 questions.

Overall, amazing effort by the Cert Empire team — keep up the great work!

jefferson.jrsa (verified owner) –

Best AZ-305 exam, it gets my approval.

Vijayendra Soni (verified owner) –

The AZ-305 content had a built-in search that worked really well. I could find any topic super quickly during revision. Cert Empire clearly paid attention to these little usability touches, which made studying much smoother and more organised overall.