Real Google Associate Cloud Engineer

Q: 1

You have a number of compute instances belonging to an unmanaged instance group. You need to SSH to one of the Compute Engine instances to run an ad hoc script. You’ve already authenticated gcloud; however, you don’t have an SSH key deployed yet. In the fewest steps possible, what’s the easiest way to SSH to the instance?

Options

Q: 2

You are the project owner of a GCP project and want to delegate control to colleagues to manage buckets and files in Cloud Storage. You want to follow Google-recommended practices. Which IAM roles should you grant your colleagues?

Options

Q: 3

You want to configure an SSH connection to a single Compute Engine instance for users in the dev1 group. This instance is the only resource in this particular Google Cloud Platform project that the dev1 users should be able to connect to. What should you do?

Options

Q: 4

You have a Dockerfile that you need to deploy on Kubernetes Engine. What should you do?

Options

Q: 5

You have an object in a Cloud Storage bucket that you want to share with an external company. The object contains sensitive data. You want access to the content to be removed after four hours. The external company does not have a Google account to which you can grant specific user-based access privileges. You want to use the most secure method that requires the fewest steps. What should you do?

Options

Q: 6

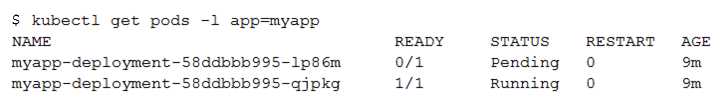

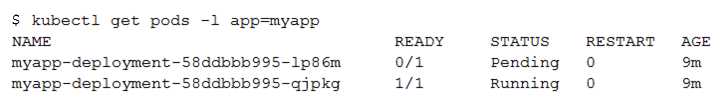

You create a Deployment with 2 replicas in a Google Kubernetes Engine cluster that has a single preemptible node pool. After a few minutes, you use kubectl to examine the status of your Pods and observe that one of them is still in Pending status:

What is the most likely cause?

Options

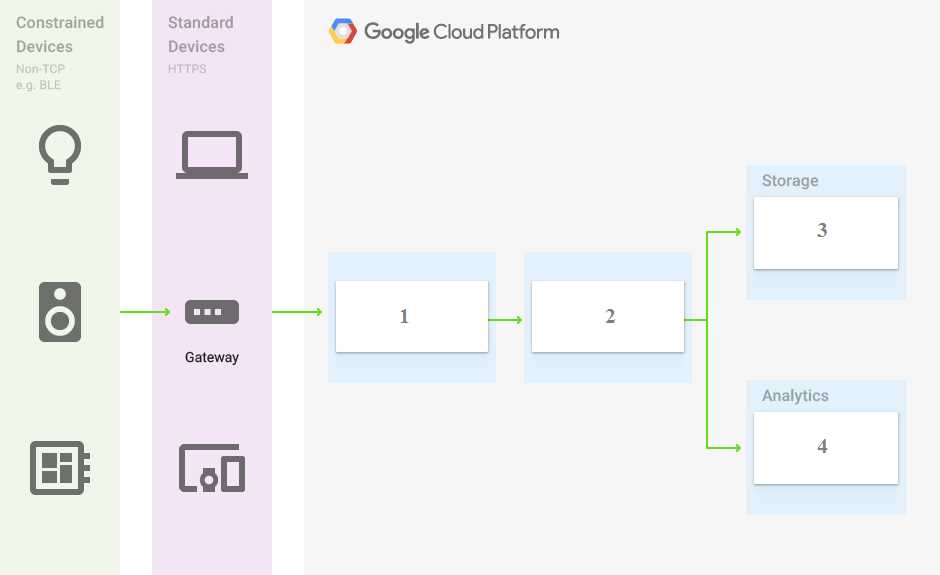

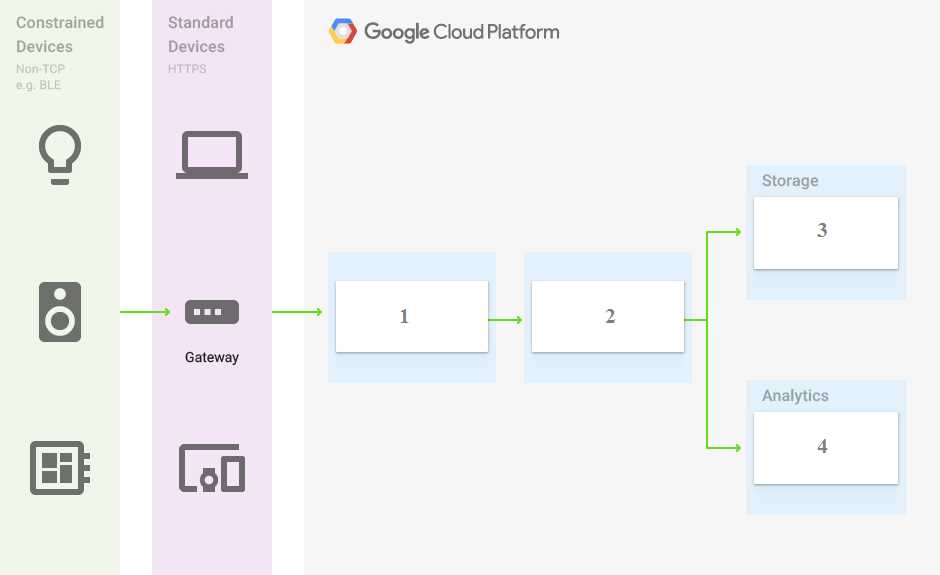

Q: 7

You are building a pipeline to process time-series data. Which Google Cloud Platform services should you put in boxes 1, 2, 3, and 4?

Options

Q: 8

You have an application that uses Cloud Spanner as a backend database. The application has a very predictable traffic pattern. You want to automatically scale up or down the number of Spanner nodes depending on traffic. What should you do?

Options

Q: 9

You are using Deployment Manager to create a Google Kubernetes Engine cluster. Using the same Deployment Manager deployment, you also want to create a DaemonSet in the kube-system namespace of the cluster. You want a solution that uses the fewest possible services. What should you do?

Options

Q: 10

You are running a data warehouse on BigQuery. A partner company is offering a recommendation engine based on the data in your data warehouse. The partner company is also running their application on Google Cloud. They manage the resources in their own project, but they need access to the BigQuery dataset in your project. You want to provide the partner company with access to the dataset. What should you do?

Options
