AZ-305.pdf

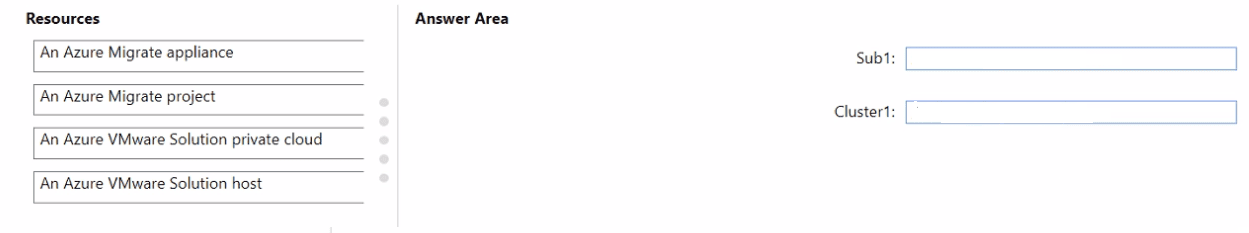

DRAG DROP You have an on-premises datacenter named Site1. Site1 contains a VMware vSphere cluster named Cluster1 that hosts 100 virtual machines. Cluster1 is managed by using VMware vCenter. You have an Azure subscription named Sub1. You plan to migrate the virtual machines from Cluster1 to Sub1. You need to identify which resources are required to run the virtual machines in Azure. The solution must minimize administrative effort. What should you configure? To answer, drag the appropriate resources to the correct targets. Each resource may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

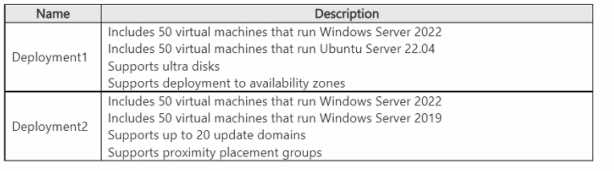

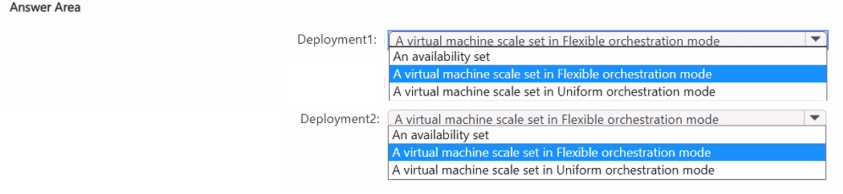

HOTSPOT You have an Azure subscription. You plan to deploy two 100-virtual machine deployments as shown in the following table.  You need to recommend a virtual machine grouping solution for the deployments. What should you include in the recommendation for each deployment? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

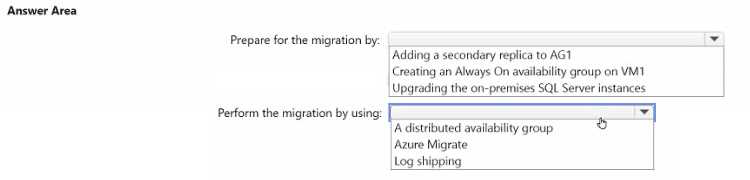

HOTSPOT You have two on-premises Microsoft SQL Server 2017 instances that host an Always On availability group named AG1. AG1 contains a single database named DB1. You have an Azure subscription that contains a virtual machine named VM1. VM1 runs Linux and contains a SQL Server 2019 instance. You need to migrate DB1 to VM1. The solution must minimize downtime on DB1. What should you do? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

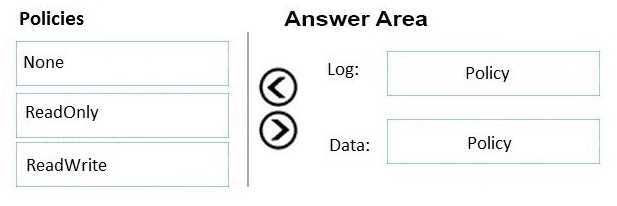

DRAG DROP You are designing a virtual machine that will run Microsoft SQL Server and will contain two data disks. The first data disk will store log files, and the second data disk will store data. Both disks are P40 managed disks. You need to recommend a caching policy for each disk. The policy must provide the best overall performance for the virtual machine. Which caching policy should you recommend for each disk? To answer, drag the appropriate policies to the correct disks. Each policy may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.
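The caching choice this question tests can be sketched as a small lookup, assuming the role of each disk (log files or data files) is already known. The `HostCaching` enum and the `recommended_caching` helper are hypothetical illustrations, not part of any Azure SDK. The mapping follows Microsoft's published storage guidance for SQL Server on Azure VMs: ReadOnly host caching for data-file disks and no caching (None) for log-file disks.

```python
from enum import Enum


class HostCaching(Enum):
    """Host caching modes that Azure offers for managed data disks."""
    NONE = "None"
    READ_ONLY = "ReadOnly"
    READ_WRITE = "ReadWrite"


def recommended_caching(disk_role: str) -> HostCaching:
    """Hypothetical helper: return the host caching mode for a SQL Server disk role."""
    role = disk_role.lower()
    if role == "data":
        # Data files are read-heavy; ReadOnly caching serves reads from the host cache.
        return HostCaching.READ_ONLY
    if role == "log":
        # Log files are write-heavy and sequential; the guidance is to disable host caching.
        return HostCaching.NONE
    raise ValueError(f"unknown disk role: {disk_role!r}")


# The two P40 disks from the question, keyed by a descriptive label.
disks = {"disk1 (log files)": "log", "disk2 (data files)": "data"}
for name, role in disks.items():
    print(name, "->", recommended_caching(role).value)
```

ReadWrite is deliberately never returned. Microsoft's guidance reserves it for the OS disk and advises against it for SQL Server data and log disks.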

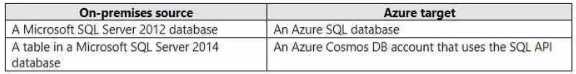

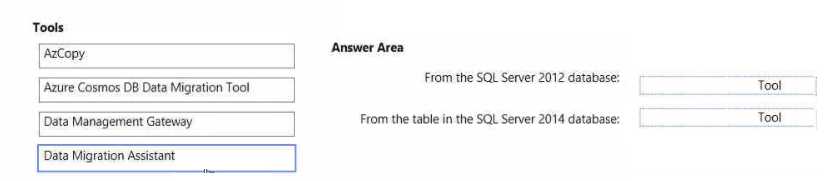

DRAG DROP You plan to import data from your on-premises environment to Azure. The data is shown in the following table.  What should you recommend using to migrate the data? To answer, drag the appropriate tools to the correct data sources. Each tool may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

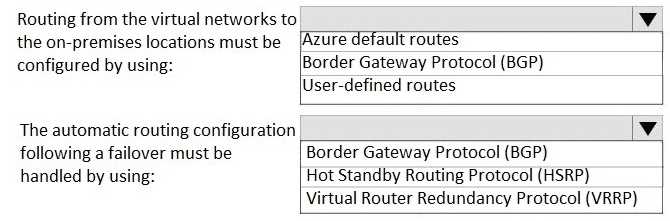

HOTSPOT Your company has two on-premises sites in New York and Los Angeles and Azure virtual networks in the East US Azure region and the West US Azure region. Each on-premises site has Azure ExpressRoute circuits to both regions. You need to recommend a solution that meets the following requirements: Outbound traffic to the Internet from workloads hosted on the virtual networks must be routed through the closest available on-premises site. If an on-premises site fails, traffic from the workloads on the virtual networks to the Internet must reroute automatically to the other site. What should you include in the recommendation? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.