Study Smarter for the Machine Learning Associate Exam with Our Free and Accurate Machine Learning Associate Exam Questions – Updated for 2026.

At Cert Empire, we are committed to providing the most reliable and up-to-date exam questions for students preparing for the Databricks Machine Learning Associate Exam. To help learners study more effectively, we’ve made sections of our Machine Learning Associate exam resources free for everyone. You can practice as much as you want with our free Machine Learning Associate practice test.

Databricks Machine Learning Associate

Q: 1

An organization is developing a feature repository and is electing to one-hot encode all categorical feature variables. A data scientist suggests that the categorical feature variables should not be one-hot encoded within the feature repository.

Which of the following explanations justifies this suggestion?
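
To make the trade-off concrete, the sketch below shows what one-hot encoding does to a single categorical column. The `one_hot` helper and the color values are hypothetical and do not come from the question. Each category becomes its own 0/1 column, so storing the encoded form in a shared feature repository locks every downstream model into that expansion, whereas keeping the raw column lets each model choose the encoding that suits it.

```python
def one_hot(values, categories=None):
    """Expand one categorical column into one 0/1 column per category (hypothetical helper)."""
    if categories is None:
        # Fix a deterministic column order so every row lines up the same way.
        categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows


colors = ["red", "green", "blue", "green"]  # hypothetical raw categorical feature
categories, encoded = one_hot(colors)
print(categories)  # ['blue', 'green', 'red']
print(encoded)     # [[0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 1, 0]]
```

One raw column turned into three, and the number of output columns grows with the number of distinct categories.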

Q: 2

A data scientist uses 3-fold cross-validation and the following hyperparameter grid when optimizing model hyperparameters via grid search for a classification problem:

● Hyperparameter 1: [2, 5, 10]

● Hyperparameter 2: [50, 100]

Which of the following represents the number of machine learning models that can be trained in parallel during this process?
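
The count can be worked out by enumerating the grid. This plain-Python sketch assumes each (hyperparameter combination, fold) pair is trained independently of the others; under that assumption every one of those fits could run in parallel given enough compute. Any final refit of the winning combination on the full training data can only start after the search finishes, so it is not counted here.

```python
from itertools import product

# Hyperparameter grid and fold count taken from the question
hyperparameter_1 = [2, 5, 10]
hyperparameter_2 = [50, 100]
n_folds = 3

# Enumerate every (value 1, value 2, fold) triple: each one is a separate fit.
fits = list(product(hyperparameter_1, hyperparameter_2, range(n_folds)))

n_combinations = len(hyperparameter_1) * len(hyperparameter_2)
print(n_combinations, len(fits))  # 6 18
```

The grid has 3 × 2 = 6 combinations, and each is trained once per fold, giving 6 × 3 = 18 independent fits.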

Q: 3

Which of the following tools can be used to distribute large-scale feature engineering without the use of a UDF or pandas Function API for machine learning pipelines?

Q: 4

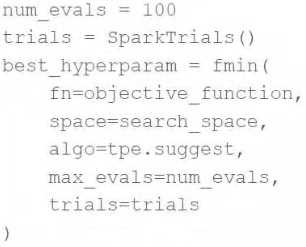

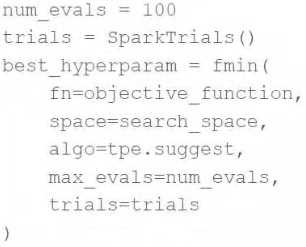

A data scientist wants to tune a set of hyperparameters for a machine learning model. They have

wrapped a Spark ML model in the objective function objective_function and they have defined the

search space search_space.

As a result, they have the following code block:

Which of the following changes do they need to make to the above code block in order to accomplish

the task?

Q: 5

A data scientist wants to parallelize the training of trees in a gradient-boosted tree model to speed up the training process. A colleague suggests that parallelizing a boosted tree algorithm can be difficult.

Which of the following describes why?
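
The difficulty comes from the way boosting builds its ensemble: each new tree is fitted to the errors (residuals) left by all of the trees before it. The minimal sketch below, a toy gradient-boosting loop for squared error that uses one-split regression stumps in plain Python, makes that dependency explicit. The helper names (`fit_stump`, `sse`, `gradient_boost`) and the data are hypothetical, and this is not Spark ML's implementation; distributed libraries typically parallelize the work inside each tree rather than across trees.

```python
def fit_stump(xs, targets):
    """Fit a one-split regression tree (a "stump") that minimises squared error."""
    best = None
    # Try every split point that leaves at least one row on each side.
    for split in sorted(set(xs))[:-1]:
        left = [t for x, t in zip(xs, targets) if x <= split]
        right = [t for x, t in zip(xs, targets) if x > split]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((t - left_mean) ** 2 for t in left)
                 + sum((t - right_mean) ** 2 for t in right))
        if best is None or error < best[0]:
            best = (error, split, left_mean, right_mean)
    _, split, left_value, right_value = best
    return lambda x: left_value if x <= split else right_value


def sse(ys, preds):
    """Sum of squared errors between targets and predictions."""
    return sum((y - p) ** 2 for y, p in zip(ys, preds))


def gradient_boost(xs, ys, n_stages=3, learning_rate=0.5):
    """Toy gradient boosting for squared error; returns a predictor and error history."""
    base = sum(ys) / len(ys)  # stage 0: a constant prediction
    preds = [base] * len(ys)
    stumps, history = [], [sse(ys, preds)]
    for _ in range(n_stages):
        # Each stage is fitted to the residuals left by ALL earlier stages,
        # so stage m cannot start until stage m - 1 has finished.
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        preds = [p + learning_rate * stump(x) for p, x in zip(preds, xs)]
        history.append(sse(ys, preds))

    def predict(x):
        return base + learning_rate * sum(s(x) for s in stumps)

    return predict, history


# Hypothetical one-feature training data
xs = [1, 2, 3, 4, 5, 6]
ys = [1.0, 1.2, 0.9, 3.0, 3.2, 2.8]
predict, history = gradient_boost(xs, ys)
print(history)  # training error after stage 0, 1, 2, 3
```

The loop body reads `preds`, which only exists once the previous stage has updated it; that data dependency is what prevents the stages from running side by side.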

Q: 6

A data scientist has written a data cleaning notebook that utilizes the pandas library, but their colleague has suggested that they refactor their notebook to scale with big data.

Which of the following approaches can the data scientist take to spend the least amount of time refactoring their notebook to scale with big data?

Q: 7

A data scientist wants to explore summary statistics for the Spark DataFrame spark_df, specifically the count, mean, standard deviation, minimum, maximum, and interquartile range (IQR) for each numerical feature.

Which of the following lines of code can the data scientist run to accomplish the task?
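
For reference, the sketch below computes the requested statistics for one numeric feature in plain Python with the standard `statistics` module; the `summarize` helper and the sample values are hypothetical. The IQR is the third quartile minus the first. In Spark, `DataFrame.summary()` called with no arguments reports count, mean, stddev, min, the 25%, 50% and 75% approximate percentiles, and max (from which the IQR follows), whereas `DataFrame.describe()` omits the percentiles. Because Spark's percentiles are approximate and `statistics.quantiles` interpolates, the two may not match exactly on the same data.

```python
import statistics


def summarize(values):
    """Return count, mean, sample stddev, min, max and IQR for a list of numbers."""
    q1, _median, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "stddev": statistics.stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
        "iqr": q3 - q1,
    }


# Hypothetical values for a single numerical feature
feature = [2.0, 4.0, 4.0, 5.0, 7.0, 9.0, 10.0, 12.0]
print(summarize(feature))
```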

Q: 8

A data scientist is developing a machine learning pipeline using AutoML on Databricks Machine Learning.

Which of the following steps will the data scientist need to perform outside of their AutoML experiment?

Q: 9

The implementation of linear regression in Spark ML first attempts to solve the linear regression problem using matrix decomposition, but this method does not scale well to large datasets with a large number of variables.

Which of the following approaches does Spark ML use to distribute the training of a linear regression model for large data?
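
Iterative optimization avoids the matrix decomposition: instead of solving the normal equations in one step, it repeatedly nudges the coefficients against the gradient of the loss, and that gradient is a sum of per-row terms that separate partitions can compute independently and then add together. The sketch below uses plain batch gradient descent on a single feature with hypothetical data; it illustrates the idea and is not Spark ML's code. Spark ML's `LinearRegression` exposes a `solver` parameter (`"auto"`, `"normal"`, `"l-bfgs"`), and L-BFGS is a more sophisticated iterative method than the plain gradient descent shown here.

```python
def gradient_descent_linreg(xs, ys, learning_rate=0.05, n_iters=2000):
    """Fit y ≈ w * x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_iters):
        # The gradient is a sum of independent per-row terms; in a distributed
        # setting each partition could compute its partial sums and a driver
        # could add them up before taking the step.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical noise-free data generated from y = 2x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = gradient_descent_linreg(xs, ys)
print(round(w, 3), round(b, 3))
```

With this noise-free data and a step size small enough for the loss surface, the loop should recover values very close to w = 2 and b = 1.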

Q: 10

Which of the following approaches can be used to view the notebook that was run to create an MLflow run?
