DP-203.pdf

Q: 1

You plan to ingest streaming social media data by using Azure Stream Analytics. The data will be stored in files in Azure Data Lake Storage, and then consumed by using Azure Databricks and PolyBase in Azure Synapse Analytics.

You need to recommend a Stream Analytics data output format to ensure that the queries from Databricks and PolyBase against the files encounter the fewest possible errors. The solution must ensure that the files can be queried quickly and that the data type information is retained.

What should you recommend?

Options

Q: 2

You have an Azure data factory that connects to a Microsoft Purview account. The data factory is registered in Microsoft Purview.

You update a Data Factory pipeline.

You need to ensure that the updated lineage is available in Microsoft Purview.

What should you do?

You have an Azure subscription that contains an Azure SQL database named DB1 and a storage account named storage1. The storage1 account contains a file named File1.txt. File1.txt contains the names of selected tables in DB1.

You need to use an Azure Synapse pipeline to copy data from the selected tables in DB1 to the files in storage1. The solution must meet the following requirements:

• The Copy activity in the pipeline must be parameterized to use the data in File1.txt to identify the source and destination of the copy.

• Copy activities must occur in parallel as often as possible.

Which two pipeline activities should you include in the pipeline? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.
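To make the two requirements concrete, the following is a conceptual Python analogy, not Synapse pipeline syntax, and it does not identify which pipeline activities the question expects. It reads a list of table names (standing in for File1.txt), derives a source and destination from each name, and runs one copy per name concurrently. The function names, the `DB1.<table>` and `storage1/<table>` path shapes, and the `max_parallel` value are all hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def read_table_list(text: str) -> list[str]:
    # Stand-in for reading File1.txt: one table name per line, blank lines ignored.
    return [line.strip() for line in text.splitlines() if line.strip()]


def copy_table(table: str) -> str:
    # Hypothetical stand-in for one parameterized copy: the same copy logic
    # every time, with source and destination derived from the table name.
    source = f"DB1.{table}"
    sink = f"storage1/{table}"
    return f"{source} -> {sink}"


def run_copies(file1_contents: str, max_parallel: int = 4) -> list[str]:
    tables = read_table_list(file1_contents)
    # Run the copies concurrently; pool.map returns results in input order.
    with ThreadPoolExecutor(max_workers=max_parallel) as pool:
        return list(pool.map(copy_table, tables))


print(run_copies("dbo.Sales\n\ndbo.Customers\n"))
```

The point of the analogy is that the copy logic is written once and parameterized by each name in the list, and the degree of parallelism is a separate setting from the copy itself.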

Options

Q: 3

You are developing a solution that will stream to Azure Stream Analytics. The solution will have both streaming data and reference data.

Which input type should you use for the reference data?

Options

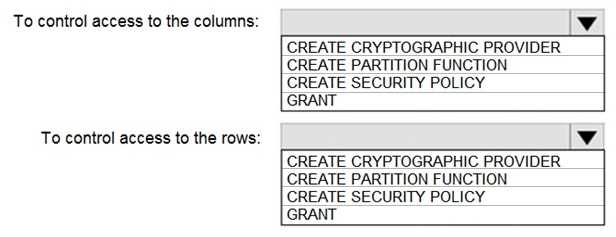

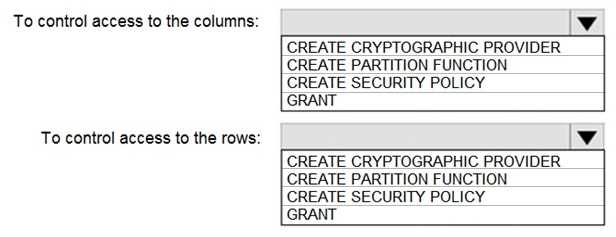

Q: 4

HOTSPOT

You have an Azure subscription that contains the following resources:

• An Azure Active Directory (Azure AD) tenant that contains a security group named Group1

• An Azure Synapse Analytics SQL pool named Pool1

You need to control the access of Group1 to specific columns and rows in a table in Pool1.

Which Transact-SQL commands should you use? To answer, select the appropriate options in the answer area.


Q: 5

You need to design a data retention solution for the Twitter feed data records. The solution must meet the customer sentiment analytics requirements.

Which Azure Storage functionality should you include in the solution?

Options

Q: 6

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Table1.

You have files that are ingested and loaded into an Azure Data Lake Storage Gen2 container named container1.

You plan to insert data from the files into Table1 and transform the data. Each row of data in the files will produce one row in the serving layer of Table1.

You need to ensure that when the source data files are loaded to container1, the DateTime is stored as an additional column in Table1.

Solution: You use a dedicated SQL pool to create an external table that has an additional DateTime column.

Does this meet the goal?

Options

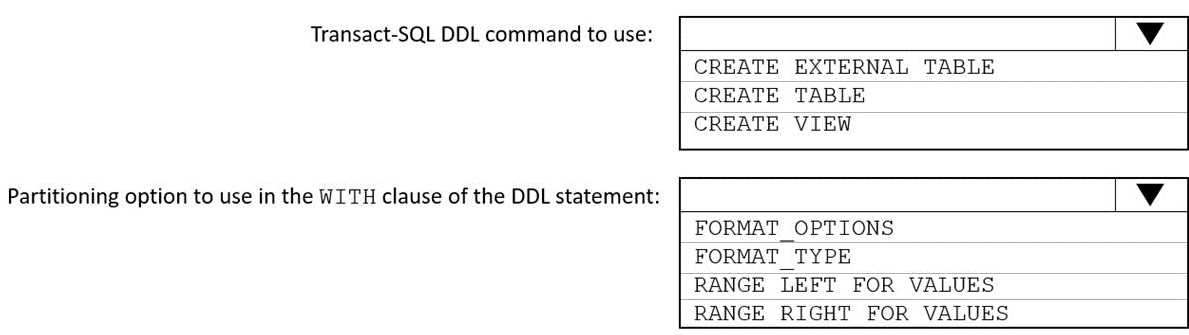

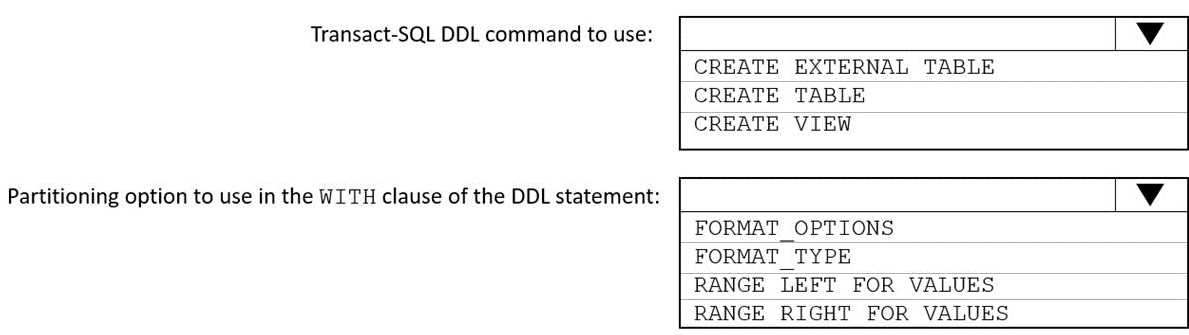

Q: 7

HOTSPOT

You need to implement an Azure Synapse Analytics database object for storing the sales transactions data. The solution must meet the sales transaction dataset requirements.

What should you do? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.


Q: 8

You plan to implement an Azure Data Lake Storage Gen2 container that will contain CSV files. The size of the files will vary based on the number of events that occur per hour.

File sizes range from 4 KB to 5 GB.

You need to ensure that the files stored in the container are optimized for batch processing.

What should you do?

Options

Q: 9

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Contacts. Contacts contains a column named Phone.

You need to ensure that users in a specific role only see the last four digits of a phone number when querying the Phone column.

What should you include in the solution?
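To make the requirement concrete, the following minimal Python sketch shows the output a restricted user is meant to see: every digit except the last four is hidden, while users outside the role see the full value. It only illustrates the desired result and is not how a dedicated SQL pool applies the restriction; the names `mask_phone` and `read_phone`, the `X` padding character, and the sample number are hypothetical.

```python
def mask_phone(phone: str, visible: int = 4, pad: str = "X") -> str:
    """Replace every digit except the last `visible` digits with `pad`.

    Non-digit characters (dashes, spaces, parentheses) are kept so the
    original layout of the number survives.
    """
    total_digits = sum(ch.isdigit() for ch in phone)
    to_hide = max(total_digits - visible, 0)
    out = []
    for ch in phone:
        if ch.isdigit() and to_hide > 0:
            out.append(pad)
            to_hide -= 1
        else:
            out.append(ch)
    return "".join(out)


def read_phone(phone: str, role_is_masked: bool) -> str:
    # Users in the restricted role get the masked value; everyone else sees the original.
    return mask_phone(phone) if role_is_masked else phone


print(read_phone("425-555-0123", role_is_masked=True))   # XXX-XXX-0123
print(read_phone("425-555-0123", role_is_masked=False))  # 425-555-0123
```

Note that the stored value is unchanged in this sketch; only what a given reader sees differs, which is the behavior the question describes.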

Options

Q: 10

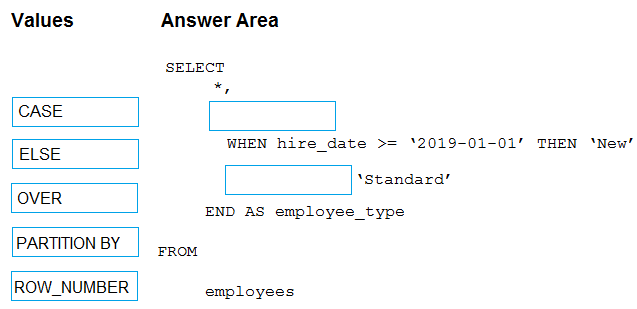

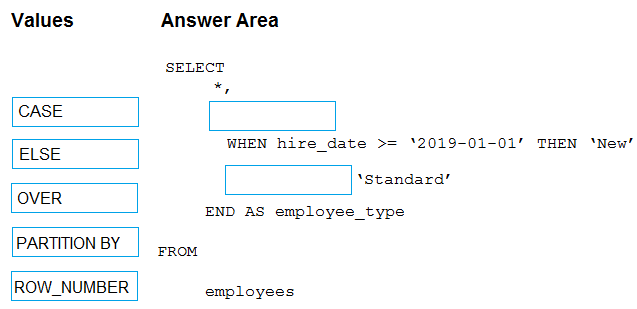

DRAG DROP

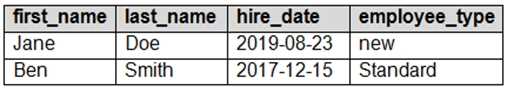

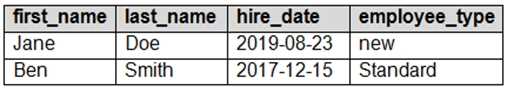

You have the following table named Employees.

You need to calculate the employee_type value based on the hire_date value.

How should you complete the Transact-SQL statement? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

