AI-900.pdf

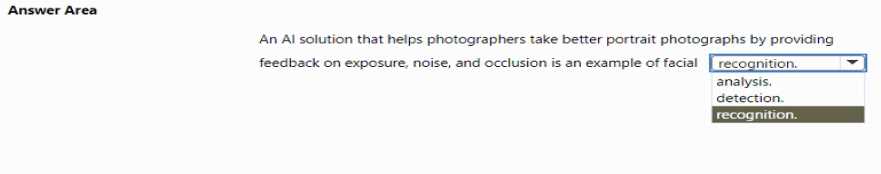

HOTSPOT Select the answer that correctly completes the sentence.

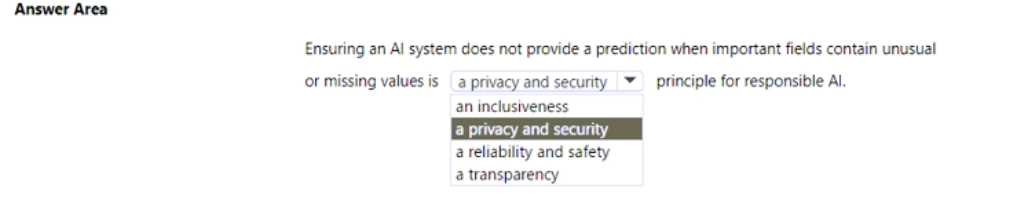

HOTSPOT Select the answer that correctly completes the sentence.

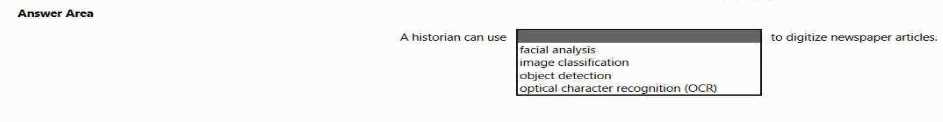

HOTSPOT Select the answer that correctly completes the sentence.

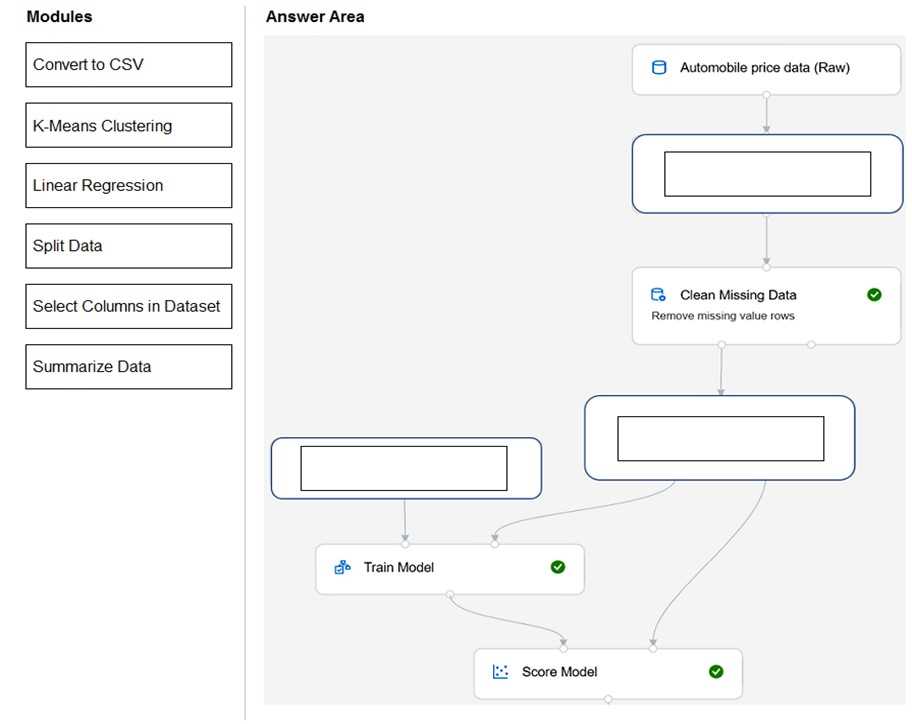

DRAG DROP You need to use Azure Machine Learning designer to build a model that will predict automobile prices. Which types of modules should you use to complete the model? To answer, drag the appropriate modules to the correct locations. Each module may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

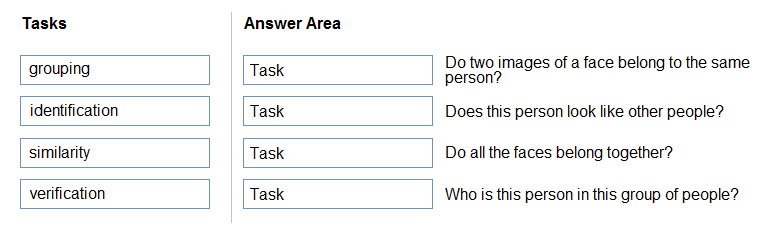

DRAG DROP Match the facial recognition tasks to the appropriate questions. To answer, drag the appropriate task from the column on the left to its question on the right. Each task may be used once, more than once, or not at all. NOTE: Each correct selection is worth one point.

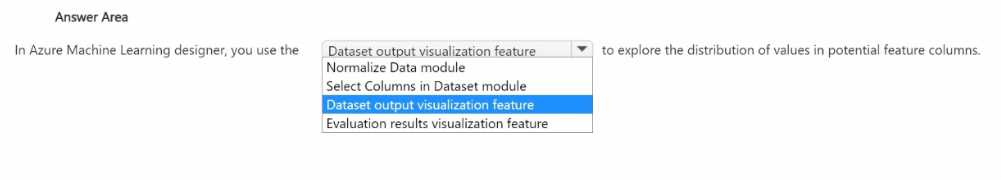

HOTSPOT To complete the sentence, select the appropriate option in the answer area.